The reporting-connector is a specialized Java-based component used in ETL (Extract, Transform, Load) jobs to extract reporting data from various sources, transform it, and load it into a centralized reporting database. It streamlines the reporting process by integrating disparate data sources and preparing the data for analysis and reporting.

Key Features

Data Extraction:

- Multiple Data Sources: Supports extraction from source databases (currently MongoDB).
- Custom Queries: Allows custom queries for precise data extraction tailored to specific reporting needs.
- Incremental Load: Supports incremental extraction and batch processing, extracting only data that is new or modified since the last load to improve performance and reduce load times.
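
Incremental loads are typically driven by a high-water mark: the job remembers the latest modification timestamp processed in the previous run and extracts only records changed after it. The following is a minimal Java sketch of that idea over in-memory rows, assuming each source record carries a `lastModified` timestamp. `SourceRow`, `Batch` and `extractSince` are hypothetical names, not part of the connector or of Talend; a real job would express the same condition as a filter in the MongoDB query.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;

public class IncrementalExtract {

    // Hypothetical source record: an id plus the time it was last modified.
    static final class SourceRow {
        final String id;
        final Instant lastModified;

        SourceRow(String id, Instant lastModified) {
            this.id = id;
            this.lastModified = lastModified;
        }
    }

    // Result of one incremental run: the extracted rows and the new watermark.
    static final class Batch {
        final List<SourceRow> rows;
        final Instant newWatermark;

        Batch(List<SourceRow> rows, Instant newWatermark) {
            this.rows = rows;
            this.newWatermark = newWatermark;
        }
    }

    // Returns only rows modified strictly after the previous watermark and
    // advances the watermark to the latest timestamp seen in this run.
    static Batch extractSince(List<SourceRow> source, Instant watermark) {
        List<SourceRow> changed = new ArrayList<>();
        Instant latest = watermark;
        for (SourceRow row : source) {
            if (row.lastModified.isAfter(watermark)) {
                changed.add(row);
                if (row.lastModified.isAfter(latest)) {
                    latest = row.lastModified;
                }
            }
        }
        return new Batch(changed, latest);
    }

    public static void main(String[] args) {
        List<SourceRow> source = List.of(
                new SourceRow("a", Instant.parse("2024-01-01T00:00:00Z")),
                new SourceRow("b", Instant.parse("2024-01-02T00:00:00Z")),
                new SourceRow("c", Instant.parse("2024-01-03T00:00:00Z")));

        // The previous run stopped at 2024-01-01, so only "b" and "c" are extracted.
        Batch batch = extractSince(source, Instant.parse("2024-01-01T00:00:00Z"));
        System.out.println(batch.rows.size() + " rows, watermark " + batch.newWatermark);
    }
}
```

Persisting `newWatermark` between runs (for example in a context variable or a control table) is left out. The strict "after" comparison avoids re-extracting the last record, at the cost of missing records written later with exactly the same timestamp; an inclusive comparison combined with de-duplication is the usual alternative.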

Data Transformation:

- Data Cleansing: Includes features for data cleansing such as removing duplicates, handling missing values, and standardizing data formats.
- Data Mapping: Provides mapping capabilities to transform source data fields into the desired target schema.
- Aggregation and Calculation: Supports aggregation and calculation operations necessary for generating meaningful insights and metrics.
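
The three transformation steps can be illustrated together. Below is a minimal Java sketch, assuming documents arrive as string maps. It renames source fields to target columns, cleanses the rows (trimming, defaulting missing values, upper-casing the channel, dropping duplicate ids) and counts rows per channel. The field names (`_id`, `channelType`, `agentName`) and target columns are hypothetical examples, not the connector's actual schema.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

public class TransformSketch {

    static final String MISSING = "UNKNOWN";

    // Hypothetical mapping from source field names to target column names.
    static final Map<String, String> FIELD_MAPPING = new LinkedHashMap<>();

    static {
        FIELD_MAPPING.put("_id", "conversation_id");
        FIELD_MAPPING.put("channelType", "channel");
        FIELD_MAPPING.put("agentName", "agent_name");
    }

    // Data mapping: copy each mapped source field into its target column.
    // Fields absent from the mapping are dropped; absent source values become null.
    static Map<String, String> mapRow(Map<String, String> source) {
        Map<String, String> target = new LinkedHashMap<>();
        for (Map.Entry<String, String> e : FIELD_MAPPING.entrySet()) {
            target.put(e.getValue(), source.get(e.getKey()));
        }
        return target;
    }

    // Data cleansing: trim values, replace missing or blank values with MISSING,
    // standardize the channel to upper case, and keep only the first row per id.
    static List<Map<String, String>> cleanse(List<Map<String, String>> rows) {
        Set<String> seenIds = new HashSet<>();
        List<Map<String, String>> result = new ArrayList<>();
        for (Map<String, String> row : rows) {
            Map<String, String> clean = new LinkedHashMap<>();
            for (Map.Entry<String, String> e : row.entrySet()) {
                String value = e.getValue() == null ? "" : e.getValue().trim();
                clean.put(e.getKey(), value.isEmpty() ? MISSING : value);
            }
            clean.computeIfPresent("channel", (k, v) -> v.toUpperCase(Locale.ROOT));
            String id = clean.get("conversation_id");
            // Rows without an id cannot be de-duplicated reliably, so they are skipped.
            if (id != null && !MISSING.equals(id) && seenIds.add(id)) {
                result.add(clean);
            }
        }
        return result;
    }

    // Aggregation: number of rows per channel, sorted by channel name.
    static Map<String, Long> countByChannel(List<Map<String, String>> rows) {
        Map<String, Long> counts = new TreeMap<>();
        for (Map<String, String> row : rows) {
            counts.merge(row.getOrDefault("channel", MISSING), 1L, Long::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<Map<String, String>> raw = List.of(
                Map.of("_id", "1", "channelType", "web", "agentName", " Alice "),
                Map.of("_id", "1", "channelType", "web", "agentName", "Alice"),
                Map.of("_id", "2", "channelType", "WhatsApp"));
        List<Map<String, String>> mapped = new ArrayList<>();
        for (Map<String, String> row : raw) {
            mapped.add(mapRow(row));
        }
        System.out.println(countByChannel(cleanse(mapped)));
    }
}
```

Running `main` prints `{WEB=1, WHATSAPP=1}`: the second row duplicates id `1` and is dropped, and the missing `agentName` of id `2` becomes `UNKNOWN`.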

Data Loading:

- Target Systems: Loads data into reporting databases (currently MySQL and MSSQL).
- Batch Data Processing: Supports batch and configuration-driven data loading to accommodate different reporting requirements.
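
Batch loading into MySQL or MSSQL can be done through plain JDBC, which both databases support. The sketch below assumes a hypothetical `conversation_report` table with two string columns; it splits the rows into fixed-size chunks and sends each chunk with `addBatch`/`executeBatch`, committing per chunk. It only needs `java.sql`, but actually calling `load` requires a running database and its JDBC driver, so `main` demonstrates just the chunking.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.List;

public class BatchLoader {

    // Splits rows into fixed-size chunks so each chunk can be sent as one JDBC batch.
    static <T> List<List<T>> chunk(List<T> rows, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        List<List<T>> chunks = new ArrayList<>();
        for (int start = 0; start < rows.size(); start += batchSize) {
            chunks.add(rows.subList(start, Math.min(start + batchSize, rows.size())));
        }
        return chunks;
    }

    // Inserts (id, channel) pairs into a hypothetical reporting table, one JDBC batch
    // per chunk, committing after each chunk. Works with any JDBC driver (MySQL or MSSQL).
    static int load(Connection conn, List<String[]> rows, int batchSize) throws SQLException {
        String sql = "INSERT INTO conversation_report (conversation_id, channel) VALUES (?, ?)";
        int inserted = 0;
        boolean previousAutoCommit = conn.getAutoCommit();
        conn.setAutoCommit(false);
        try (PreparedStatement ps = conn.prepareStatement(sql)) {
            for (List<String[]> batch : chunk(rows, batchSize)) {
                for (String[] row : batch) {
                    ps.setString(1, row[0]);
                    ps.setString(2, row[1]);
                    ps.addBatch();
                }
                ps.executeBatch();
                conn.commit();
                // Count submitted rows: some drivers report SUCCESS_NO_INFO per statement.
                inserted += batch.size();
            }
        } catch (SQLException e) {
            // Only the uncommitted (failing) chunk is rolled back.
            conn.rollback();
            throw e;
        } finally {
            conn.setAutoCommit(previousAutoCommit);
        }
        return inserted;
    }

    public static void main(String[] args) {
        // No database is needed to show the chunking: 5 rows in batches of 2.
        List<Integer> rows = List.of(1, 2, 3, 4, 5);
        for (List<Integer> c : chunk(rows, 2)) {
            System.out.println(c);
        }
    }
}
```

Committing per chunk keeps transactions small; if a chunk fails, only that chunk is rolled back and earlier chunks stay committed, so a rerun has to tolerate partially loaded data.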

Monitoring and Logging:

- Detailed Logging: Maintains detailed logs of all ETL operations for audit purposes and troubleshooting.
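
How the Talend jobs produce their logs is not described here. As a generic illustration, the following Java sketch wraps one ETL step with `java.util.logging`, recording its start, duration and any failure; `runStep` and the logger name `reporting-connector` are hypothetical.

```java
import java.util.function.Supplier;
import java.util.logging.Level;
import java.util.logging.Logger;

public class EtlStepLogger {

    private static final Logger LOG = Logger.getLogger("reporting-connector");

    // Runs one ETL step, logging its start, duration and outcome so that every
    // run leaves an audit trail; failures are logged with the exception and rethrown.
    static <T> T runStep(String stepName, Supplier<T> step) {
        long start = System.nanoTime();
        LOG.info(() -> "Starting step " + stepName);
        try {
            T result = step.get();
            long millis = (System.nanoTime() - start) / 1_000_000;
            LOG.info(() -> "Finished step " + stepName + " in " + millis + " ms");
            return result;
        } catch (RuntimeException e) {
            LOG.log(Level.SEVERE, "Step " + stepName + " failed", e);
            throw e;
        }
    }

    public static void main(String[] args) {
        // The step here just returns a fixed row count for demonstration.
        int rows = runStep("extract-conversations", () -> 42);
        System.out.println("Extracted " + rows + " rows");
    }
}
```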

Use Cases

- Business Intelligence: Aggregating and transforming data from multiple sources to create comprehensive reports and dashboards for business analysis.
- Data Warehousing: Loading transformed data into a centralized data warehouse for long-term storage and analysis.
- Operational Reporting: Generating operational reports by extracting data from transactional systems and loading it into a reporting database.
- Regulatory Compliance: Ensuring data is processed and reported in compliance with industry regulations and standards.

Reporting Connector Technical Insights

1. ETL Jobs

2. Reporting Database Schema

Reporting Connector Setup Using Talend Open Studio

Prerequisites

- Download Talend Open Studio for Big Data 8.0.1.
- Clone the reporting-connector project from the develop branch using the following command:

  ```shell
  git clone -b develop https://gitlab.expertflow.com/cim/reporting-connector.git
  ```

Setup

-

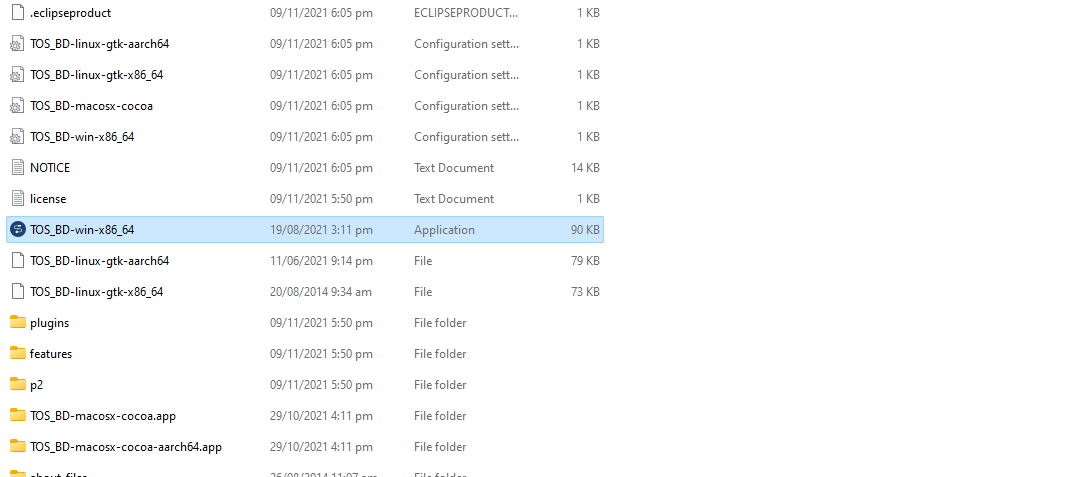

Navigate to the downloaded Talend setup directory into your local system.

-

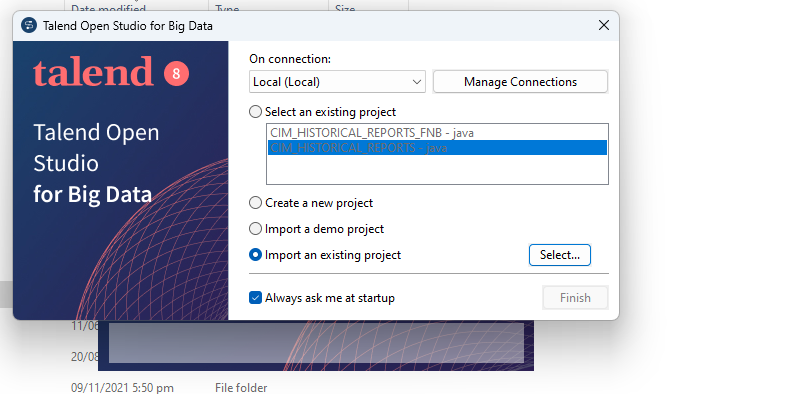

For Windows click on TOS_BD-win-x86_64 to start Talend Open Studio.

-

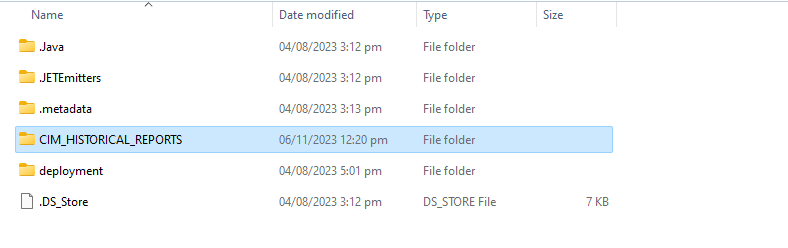

Click on import an existing project and select project from reporting-connector ( local repository ).

-

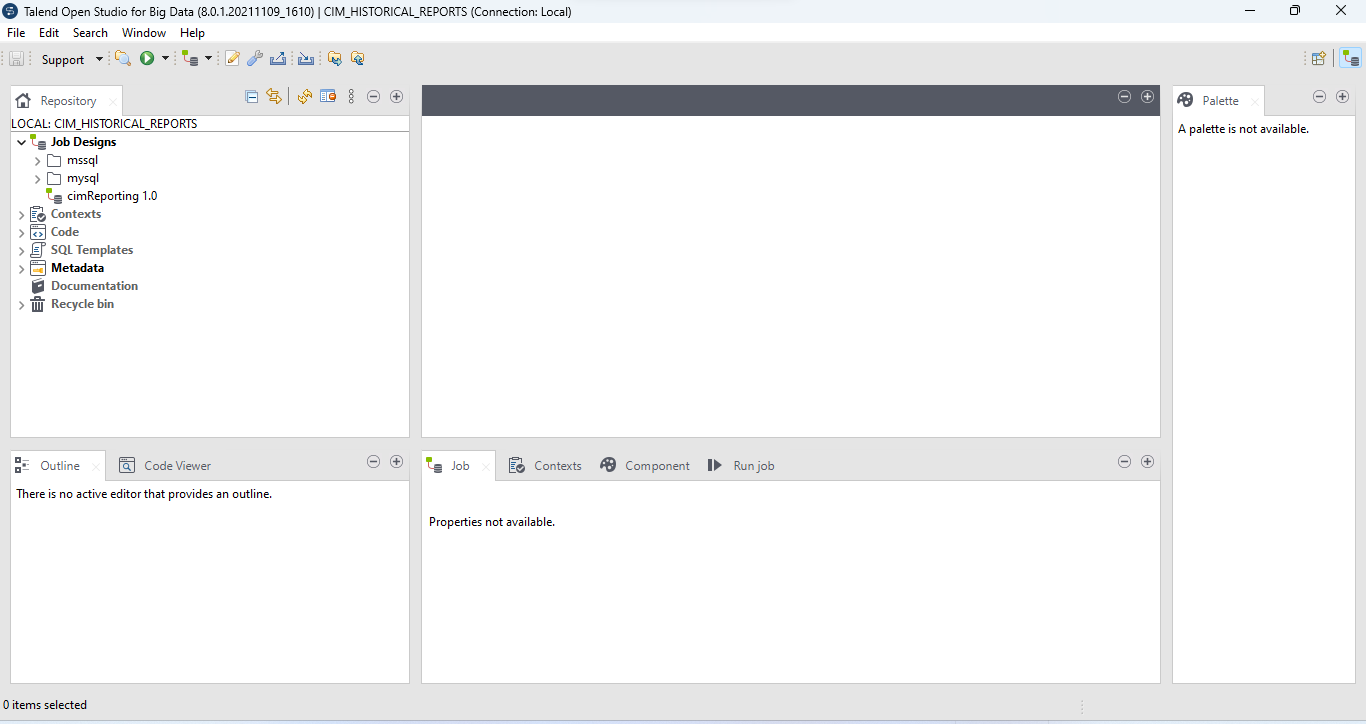

This is how the Talend UI looks, with distinct directories for MySQL and MSSQL ETL jobs.

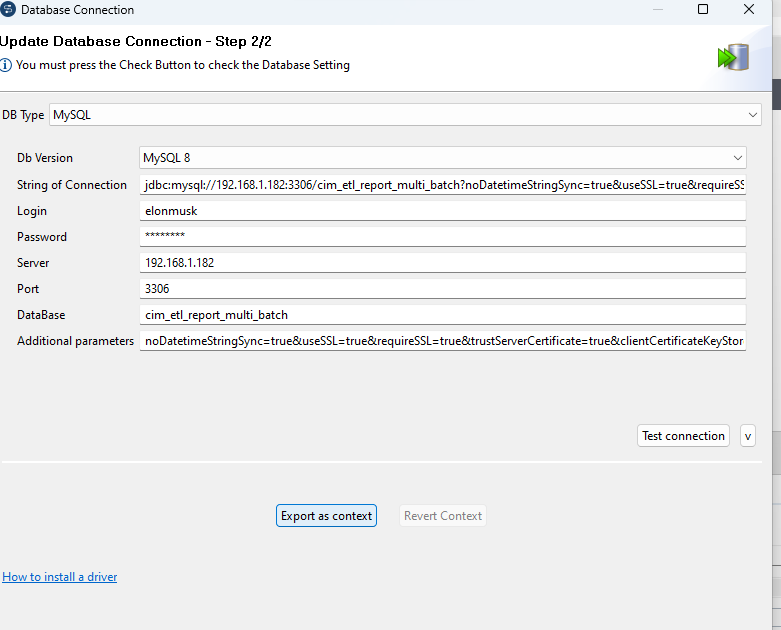

Database Connection Setup for SQL

This section assumes you are already familiar with creating MySQL databases and have the required access rights on the MySQL server.

The MSSQL connection follows a similar procedure.

-

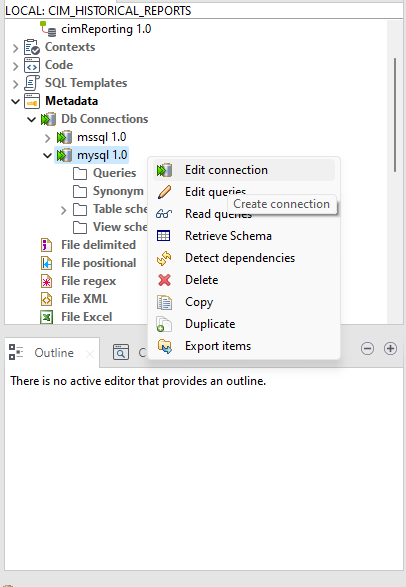

Toggle to Metadata ->Db connections ->mysql 1.0 and click on Edit connection option from the menu.

-

Click on Export as context button from the window that appears.

-

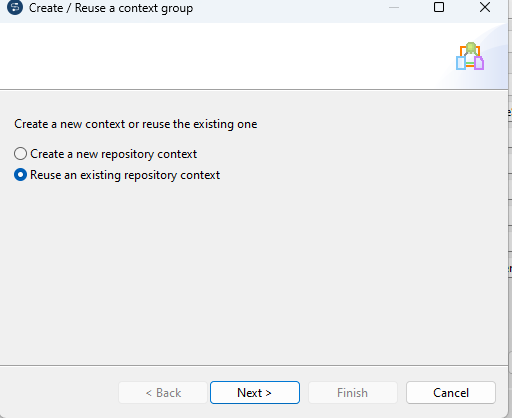

Click on Reuse an existing repository context.

-

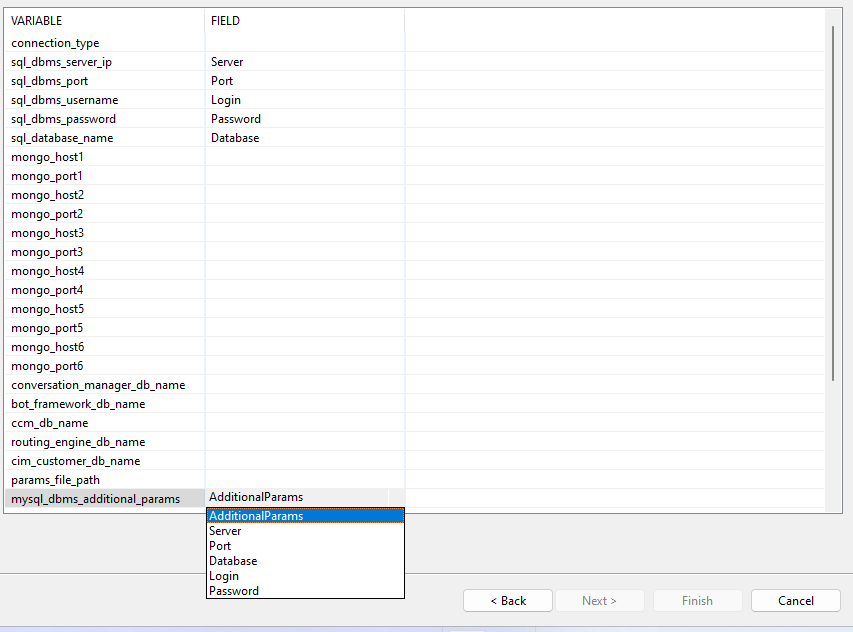

Select context_params file and assign the following fields to database connection parameters.

-

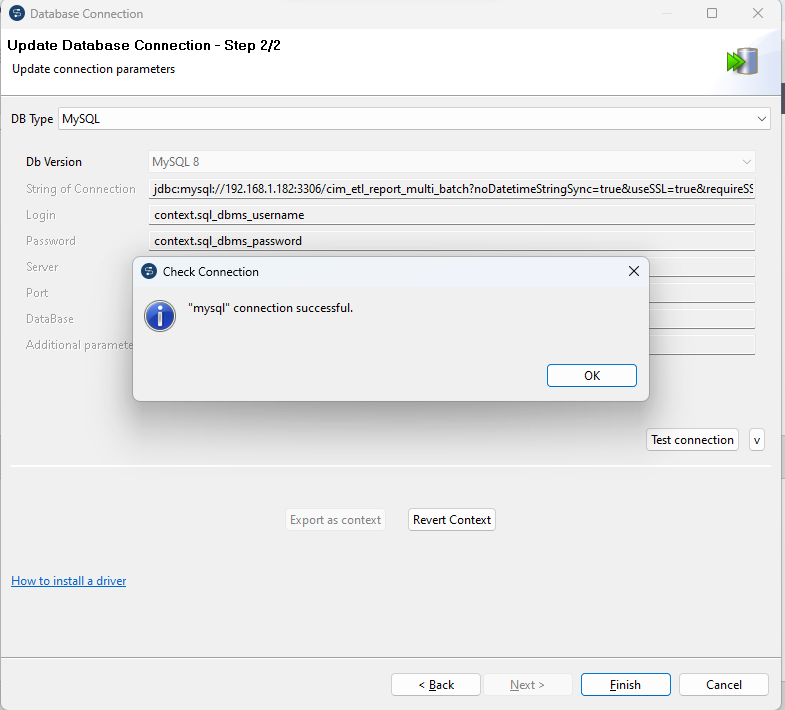

Click on test connection to verify MySQL connection.

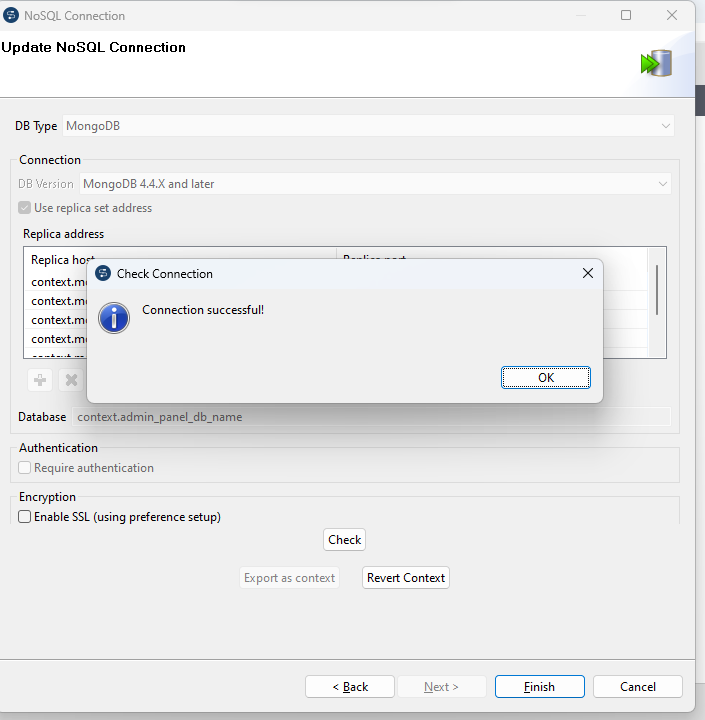

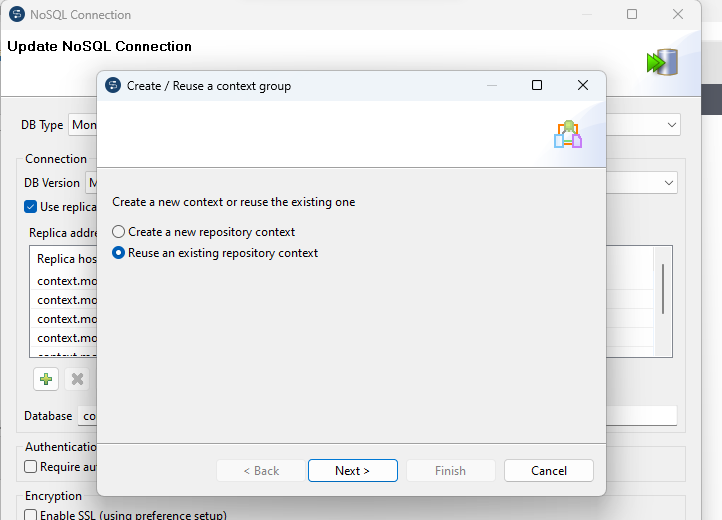

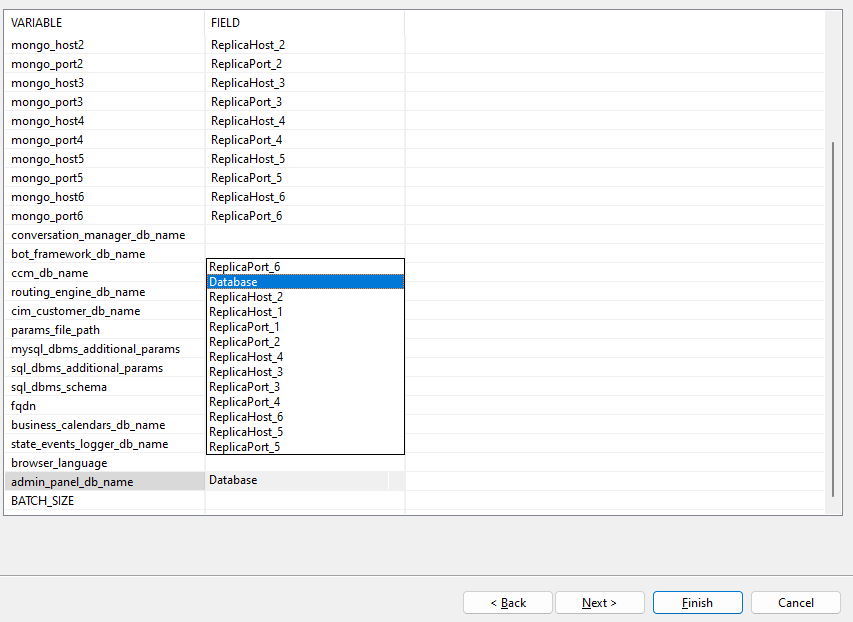
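
Outside Talend, the same context parameters can be turned into a JDBC URL and checked much like the Test connection button does. This is a sketch assuming hypothetical context keys `host`, `port`, `database`, `user` and `password` (the real names are the ones assigned in `context_params`); the URL formats are the standard ones for MySQL Connector/J and the Microsoft JDBC Driver for SQL Server.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.util.Map;

public class ReportingDbUrl {

    enum DbType { MYSQL, MSSQL }

    // Builds a JDBC URL from hypothetical context parameters (host, port, database).
    static String jdbcUrl(DbType type, Map<String, String> ctx) {
        String host = ctx.get("host");
        String port = ctx.get("port");
        String db = ctx.get("database");
        switch (type) {
            case MYSQL:
                return "jdbc:mysql://" + host + ":" + port + "/" + db;
            case MSSQL:
                return "jdbc:sqlserver://" + host + ":" + port + ";databaseName=" + db;
            default:
                throw new IllegalArgumentException("Unsupported type: " + type);
        }
    }

    // Rough equivalent of "Test connection": open a connection and check it is valid.
    // Requires the matching JDBC driver (MySQL Connector/J or mssql-jdbc) on the classpath.
    static boolean testConnection(DbType type, Map<String, String> ctx) {
        try (Connection conn = DriverManager.getConnection(
                jdbcUrl(type, ctx), ctx.get("user"), ctx.get("password"))) {
            return conn.isValid(5);
        } catch (SQLException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Example values only; 3306 is the default MySQL port.
        Map<String, String> ctx = Map.of(
                "host", "localhost", "port", "3306", "database", "reporting",
                "user", "report_user", "password", "secret");
        System.out.println(jdbcUrl(DbType.MYSQL, ctx));
    }
}
```

`testConnection` needs the corresponding driver on the classpath and a reachable server; depending on the driver version, an MSSQL URL may also need encryption options such as `encrypt` or `trustServerCertificate`.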

Database connection setup for NO-SQL( mongoDb )

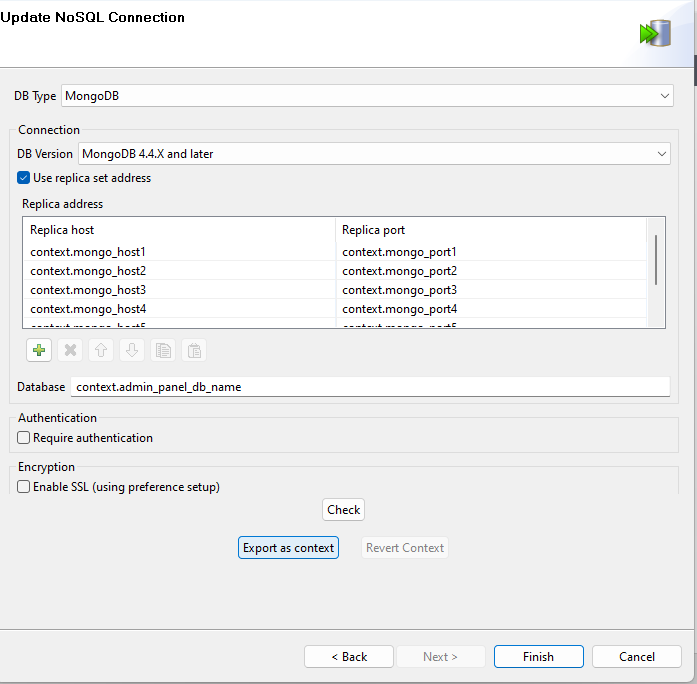

The similar steps should be performed for establishing connection with all the mongoDb databases one by one.

The ( database ) property must be carefully assign to the selected database.

-

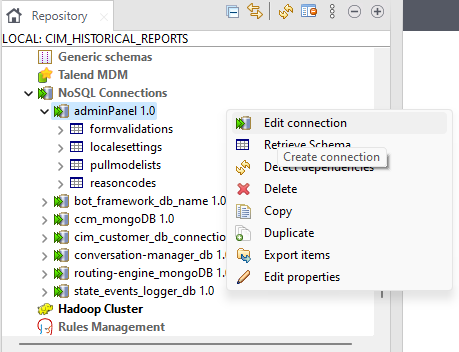

Toggle to Metadata ->NoSQL connections ->adminPanel 1.0 and click on Edit connection option from the menu.

-

Click on Export as context button from the window that appears.

-

Click on Reuse an existing repository context.

-

Select context_params file and assign the following fields to database connection parameters.

-

Click on test connection to verify MongoDb connection.
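
Because each MongoDB database gets its own connection, only the database part changes between them. The following Java sketch builds one standard `mongodb://` connection string per database and percent-encodes the credentials. The credentials, the `admin` authentication database and the second database name are hypothetical; `adminPanel` is used only because it appears as a connection name above.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

public class MongoConnections {

    // Percent-encodes credentials for a MongoDB URI. URLEncoder produces form
    // encoding (space becomes '+'), so '+' is converted to %20 afterwards; a literal
    // '+' in the input has already been encoded as %2B at that point.
    static String encode(String value) {
        return URLEncoder.encode(value, StandardCharsets.UTF_8).replace("+", "%20");
    }

    // Builds one connection URI per database; only the database path differs,
    // and every connection must name its own, non-empty database.
    static List<String> uris(String host, int port, String user, String password,
                             String authSource, List<String> databases) {
        List<String> result = new ArrayList<>();
        for (String db : databases) {
            if (db == null || db.isBlank()) {
                throw new IllegalArgumentException("database property must not be empty");
            }
            result.add("mongodb://" + encode(user) + ":" + encode(password) + "@" + host + ":" + port
                    + "/" + db + "?authSource=" + authSource);
        }
        return result;
    }

    public static void main(String[] args) {
        // "otherDb" is a hypothetical second database; avoid printing real passwords.
        for (String uri : uris("localhost", 27017, "etl_user", "p@ss word", "admin",
                List.of("adminPanel", "otherDb"))) {
            System.out.println(uri);
        }
    }
}
```

For example, `uris("localhost", 27017, "etl_user", "p@ss word", "admin", List.of("adminPanel"))` yields `mongodb://etl_user:p%40ss%20word@localhost:27017/adminPanel?authSource=admin`.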