Solution Prerequisites

The following are the solution setup prerequisites.

Hardware requirements

For HA deployment, each machine in the cluster should have the following hardware specifications.

| Resource | Minimum requirement |
|---|---|
| CPU | 2 vCPU cores |
| RAM | 4 GB |
| Disk | 100 GB mounted on / |
| NICs | 1 NIC |
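You can check the minimum specifications above on each machine before installation. The following Bash sketch is illustrative only. The function names are hypothetical, and it assumes a Linux host that provides nproc, /proc/meminfo and df. It applies a 90% tolerance to RAM and disk because the kernel and the filesystem always report slightly less than the nominal size. This tolerance is an assumption of the sketch, not a documented requirement. The NIC count is not checked.

```shell
#!/usr/bin/env bash
# Pre-flight sketch: compares this host against the minimum hardware table.
# RAM and disk use a 90% tolerance (assumption of this sketch), because
# MemTotal and filesystem size are always a little below the nominal size.

MIN_CPUS=2
MIN_RAM_KB=$((4 * 1024 * 1024))     # 4 GB in KiB, the unit /proc/meminfo uses
MIN_DISK_KB=$((100 * 1024 * 1024))  # 100 GB on / in 1 KiB blocks, as df -k reports
TOLERANCE_PCT=90

# meets_minimum ACTUAL MINIMUM [PERCENT]
# Succeeds when ACTUAL >= MINIMUM * PERCENT / 100 (PERCENT defaults to 100).
meets_minimum() {
  local actual=$1 minimum=$2 pct=${3:-100}
  [ $((actual * 100)) -ge $((minimum * pct)) ]
}

# check_hardware: prints one line per resource, returns 1 if any is short.
check_hardware() {
  local cpus ram_kb disk_kb rc=0
  cpus=$(nproc)
  ram_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  disk_kb=$(df -Pk / | awk 'NR == 2 {print $2}')

  if meets_minimum "$cpus" "$MIN_CPUS"; then
    echo "CPU:  OK ($cpus vCPU)"
  else
    echo "CPU:  FAIL ($cpus vCPU, need $MIN_CPUS)"; rc=1
  fi
  if meets_minimum "$ram_kb" "$MIN_RAM_KB" "$TOLERANCE_PCT"; then
    echo "RAM:  OK ($ram_kb KiB)"
  else
    echo "RAM:  FAIL ($ram_kb KiB, need about $MIN_RAM_KB KiB)"; rc=1
  fi
  if meets_minimum "$disk_kb" "$MIN_DISK_KB" "$TOLERANCE_PCT"; then
    echo "Disk: OK ($disk_kb KiB on /)"
  else
    echo "Disk: FAIL ($disk_kb KiB on /, need about $MIN_DISK_KB KiB)"; rc=1
  fi
  return "$rc"
}
```

To use it, source the file on each cluster machine and run check_hardware. A non-zero exit status means at least one resource is below the minimum.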

Software Requirements

OS Compatibility

The customer/partner must install one of the following operating systems on each server.

| Item | Version | Notes |
|---|---|---|
| CentOS Linux | release 7.7.1908 (Core) | Administrative privileges (root) are required to follow the deployment steps. |
| Red Hat Enterprise Linux | release 8.4 (Ootpa) | Administrative privileges (root) are required to follow the deployment steps. |
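To confirm that a server runs one of the tested releases, compare the release string in /etc/redhat-release (present on both CentOS and RHEL) against the table. This is a minimal sketch, and the function name is hypothetical. The match is deliberately exact, so any other minor release is reported as untested.

```shell
#!/usr/bin/env bash
# Sketch: exact match of the OS release string against the tested releases.
SUPPORTED_RELEASES=(
  "CentOS Linux release 7.7.1908 (Core)"
  "Red Hat Enterprise Linux release 8.4 (Ootpa)"
)

# is_supported_release [FILE]: succeeds when the first line of FILE
# (default /etc/redhat-release) equals one of SUPPORTED_RELEASES.
is_supported_release() {
  local file=${1:-/etc/redhat-release} line r
  [ -r "$file" ] || return 1
  read -r line < "$file"   # default IFS trims surrounding whitespace
  for r in "${SUPPORTED_RELEASES[@]}"; do
    [ "$line" = "$r" ] && return 0
  done
  return 1
}
```

For example: is_supported_release && echo "tested release" || echo "untested release".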

Database Requirements

| Item | Notes |
|---|---|
| MS SQL Server 2014, 2016 Express/Standard/Enterprise | |

|

Docker Engine Requirements

| Item | Notes |
|---|---|
| Docker CE 18+ | |
| Version 1.23.1 | |
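You can check the Docker CE 18+ requirement against the version that the daemon reports. The sketch below uses hypothetical function names. It relies on GNU sort -V for version ordering and on docker version --format, and it covers only the Docker CE row.

```shell
#!/usr/bin/env bash
# Sketch: check the Docker Engine version against the "Docker CE 18+" row.
MIN_DOCKER=18

# version_ge A B: succeeds when version A >= version B (GNU sort -V ordering).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_docker: asks the daemon for its version and reports whether it is new enough.
check_docker() {
  local server
  if ! server=$(docker version --format '{{.Server.Version}}' 2>/dev/null); then
    echo "Docker daemon is not installed or not reachable" >&2
    return 1
  fi
  if version_ge "$server" "$MIN_DOCKER"; then
    echo "Docker $server OK"
  else
    echo "Docker $server is older than $MIN_DOCKER" >&2
    return 1
  fi
}
```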

Browser Compatibility

| Item | Version | Notes |
|---|---|---|
| Chrome | 92.0.x | |
| Firefox | Not tested | |
| IE | Not tested | An on-demand testing cycle can be planned. |

Cisco Unified CCE Compatibility

11.6 (Enhanced & Premium)

Installation Steps

Internet access must be available on the machine where the application is being installed, and connections on port 9242 must be allowed in the network firewall, to carry out the installation steps. All commands in this guide must be executed with root user privileges.

Allow ports in the firewall

For internal communication of Docker Swarm, you need to allow both inbound and outbound communication on the following ports: 8083/tcp, 8084/tcp, 8085/tcp, 8086/tcp, 8087/tcp, 8844/tcp, 8834/tcp, 8443/tcp and 443/tcp.

To start the firewall on CentOS (if it is not already running), execute the following commands on all the cluster machines:

systemctl enable firewalld

systemctl start firewalld

To open these ports in the CentOS firewall, execute the following commands on all the cluster machines:

firewall-cmd --add-port=8083/tcp --permanent

firewall-cmd --add-port=8084/tcp --permanent

firewall-cmd --add-port=8085/tcp --permanent

firewall-cmd --add-port=8086/tcp --permanent

firewall-cmd --add-port=8087/tcp --permanent

firewall-cmd --add-port=8844/tcp --permanent

firewall-cmd --add-port=8834/tcp --permanent

firewall-cmd --add-port=8443/tcp --permanent

firewall-cmd --add-port=443/tcp --permanent

firewall-cmd --reload
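The same rule repeats for every port, so you can also drive the firewall commands above from a single list. This Bash sketch uses the same firewall-cmd options as the commands above. The open_ports function and the DRY_RUN switch are hypothetical conveniences that let you preview the commands before running them as root.

```shell
#!/usr/bin/env bash
# Sketch: open every required port with one loop, then reload firewalld.
PORTS="8083 8084 8085 8086 8087 8844 8834 8443 443"

# open_ports: runs firewall-cmd for each port in PORTS, then --reload.
# With DRY_RUN=1 the commands are only printed, not executed.
open_ports() {
  local run=() p
  [ "${DRY_RUN:-0}" = 1 ] && run=(echo)
  for p in $PORTS; do
    "${run[@]}" firewall-cmd --add-port="${p}/tcp" --permanent || return 1
  done
  "${run[@]}" firewall-cmd --reload
}
```

For example, DRY_RUN=1 open_ports prints the ten commands, and open_ports applies them. After the reload, firewall-cmd --list-ports shows the ports that are open in the default zone.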

Configure Log Rotation

Add the following lines to the /etc/docker/daemon.json file (create the file if it does not already exist) and restart the Docker daemon using systemctl restart docker. In an HA deployment, perform this step on all the machines in the cluster.

{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "50m",
    "max-file": "3"
  }
}
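Editing daemon.json by hand is error-prone. Overwriting an existing file would also silently drop any other daemon settings in it. The following sketch (the function name is hypothetical) writes the log-rotation settings only when the file does not exist yet. If the file already exists, it asks you to merge the settings manually.

```shell
#!/usr/bin/env bash
# Sketch: create daemon.json with the log-rotation settings if it is absent.

# write_daemon_json [PATH]: writes the settings to PATH (default
# /etc/docker/daemon.json). Refuses to overwrite an existing file,
# because other daemon settings in it would be lost.
write_daemon_json() {
  local path=${1:-/etc/docker/daemon.json}
  if [ -e "$path" ]; then
    echo "$path already exists; add the log-driver and log-opts keys manually" >&2
    return 1
  fi
  mkdir -p "$(dirname "$path")" || return 1
  cat > "$path" <<'EOF'
{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "50m",
    "max-file": "3"
  }
}
EOF
}
```

For example: write_daemon_json && systemctl restart docker. Afterwards, docker info --format '{{.LoggingDriver}}' should report json-file. Docker applies these log options only to containers created after the restart.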

Creating Databases

Create the databases for the UMM and Supervisor Tools services on the MS SQL Server, using suitable names, and then follow the application installation steps.
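You can create the databases from any host that has the sqlcmd client. The sketch below only builds the T-SQL statement. The names umm_db and supervisor_tools_db are hypothetical placeholders for the "suitable names" mentioned above. The name check, which allows only letters, digits and underscores, is a conservative assumption of this sketch.

```shell
#!/usr/bin/env bash
# Sketch: build an idempotent CREATE DATABASE statement for MS SQL Server.

# create_db_sql NAME: prints T-SQL that creates NAME only if it does not exist.
# Rejects names with characters other than letters, digits and underscores.
create_db_sql() {
  local name=$1
  case $name in
    ''|*[!A-Za-z0-9_]*)
      echo "invalid database name: '$name'" >&2
      return 1 ;;
  esac
  printf "IF DB_ID(N'%s') IS NULL CREATE DATABASE [%s];\n" "$name" "$name"
}
```

For example: sqlcmd -S <mssql-host> -U <user> -Q "$(create_db_sql umm_db)", then the same command for supervisor_tools_db. When -P is omitted, sqlcmd prompts for the password.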

Installing Application

- Download the deployment script supervisor-tools-deployment.sh. This script will:
  - delete the supervisor-tools-deployment directory in the present working directory, if it exists;
  - clone the supervisor-tools-deployment repository from GitLab into the present working directory.
- Give the script execute permission and execute it:

  chmod +x supervisor-tools-deployment.sh

  ./supervisor-tools-deployment.sh

- If the customer does not want to store the database password in the environment variables file, configure Vault to hold the database password instead.
- Update the environment variables in the following files inside the supervisor-tools-deployment/docker/environment_variables folder:
  - Update the UMM environment variables in the umm-environment-variables.env file.
  - Update the Supervisor Tools environment variables in the environment-variables.env file. See also Caller Lists Environment Variables to specify the variables of the Caller Lists microservice.
- Get domain/CA-signed SSL certificates for the Supervisor Tools FQDN/CN and place the files in the /root/supervisor-tools-deployment/docker/certificates folder. The files must be named server.crt and server.key.
- For HA, copy the supervisor-tools-deployment directory to the second machine by executing the following command:

  scp -r supervisor-tools-deployment root@machine-ip:~/

- Go to the second machine and update the environment variables where necessary.
- Execute the following commands inside the /root/supervisor-tools-deployment directory on both machines:

  chmod 755 install.sh

  ./install.sh
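Before you run install.sh, you may want to confirm that the certificate files are in place and belong together. This is a minimal sketch with a hypothetical function name. The key-match check runs only when openssl is installed. It compares the public key embedded in server.crt with the one derived from server.key, and it assumes the key is not passphrase-protected.

```shell
#!/usr/bin/env bash
# Sketch: verify server.crt and server.key exist and (if openssl is present) match.

# check_certs [DIR]: DIR defaults to the certificates folder used above.
check_certs() {
  local dir=${1:-/root/supervisor-tools-deployment/docker/certificates} f
  for f in server.crt server.key; do
    if [ ! -s "$dir/$f" ]; then
      echo "missing or empty: $dir/$f" >&2
      return 1
    fi
  done
  if command -v openssl >/dev/null 2>&1; then
    local cert_pub key_pub
    # Public key from the certificate vs. public key derived from the private key.
    cert_pub=$(openssl x509 -in "$dir/server.crt" -noout -pubkey) || return 1
    key_pub=$(openssl pkey -in "$dir/server.key" -pubout) || return 1
    if [ "$cert_pub" != "$key_pub" ]; then
      echo "server.key does not match server.crt" >&2
      return 1
    fi
  fi
}
```

In addition, openssl x509 -in server.crt -noout -subject -enddate shows the CN and expiry date, which you can compare against the Supervisor Tools FQDN.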

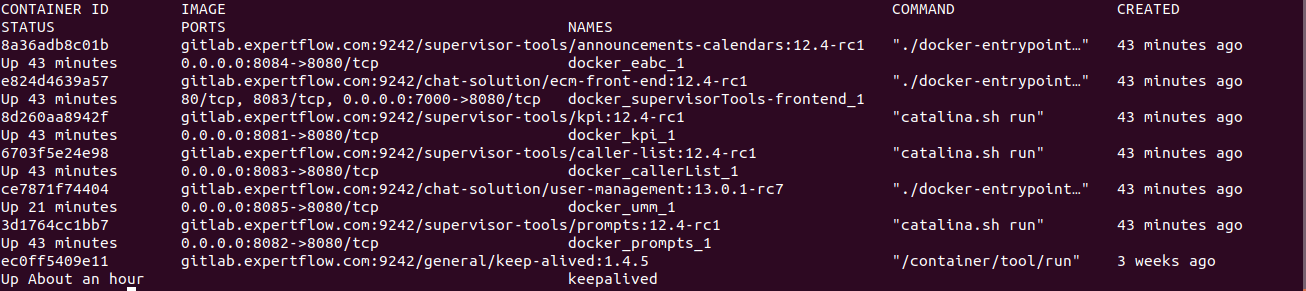

Run the following command to ensure that all the components are up and running. The screenshot below shows a sample response for a standalone non-HA deployment.

docker ps
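Checking docker ps output by eye gets tedious when there are many containers. The sketch below (the function name is hypothetical) reads name/status pairs and lists any container that is not up or whose health check reports unhealthy. Feed it from docker ps -a so that stopped containers are included as well.

```shell
#!/usr/bin/env bash
# Sketch: flag containers that are not running or report an unhealthy status.
# Input: one "NAME<TAB>STATUS" line per container on stdin.

report_unhealthy() {
  local name status bad=0
  while IFS=$'\t' read -r name status; do
    [ -n "$name" ] || continue            # skip blank lines
    case $status in
      Up*'(unhealthy)'*)                  # running, but its health check fails
        echo "$name: $status"; bad=1 ;;
      Up*) ;;                             # running (healthy or no health check)
      *)                                  # Exited, Created, Restarting, ...
        echo "$name: $status"; bad=1 ;;
    esac
  done
  return "$bad"
}
```

For example: docker ps -a --format '{{.Names}}\t{{.Status}}' | report_unhealthy. An empty output and a zero exit status mean every container is up.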

Virtual IP configuration

Repeat the following steps on all the machines in the HA cluster.

- Download the keepalived.sh script and place it in the /root directory.
- Give it execute permission and execute it:

  chmod +x keepalived.sh

  ./keepalived.sh

- Configure the keep.env file inside the /root/keep-alived folder:

  | Name | Description |
  |---|---|
  | KEEPALIVED_UNICAST_PEERS | Peer machine IP. For example: 192.168.1.80 |
  | KEEPALIVED_VIRTUAL_IPS | Virtual IP of the cluster. It should be available in the LAN. For example: 192.168.1.245 |
  | KEEPALIVED_PRIORITY | Priority of the node. An instance with a lower number has a higher priority. It can take any value from 1 to 255. |
  | KEEPALIVED_INTERFACE | Name of the network interface through which the machine is connected to the network. On CentOS, ifconfig or ip addr show lists all the network interfaces and their assigned addresses. |
  | CLEARANCE_TIMEOUT | Initial startup time, in seconds, of the application monitored by keepalived. A nominal value of 60-120 is usually sufficient. |
  | KEEPALIVED_ROUTER_ID | Do not change this value. |
  | SCRIPT_VAR | Health-check command that keepalived polls every 2 seconds. Keepalived relinquishes control if this command returns a non-zero exit code. It can check either UMM or any backend microservice API. For example: pidof dockerd && wget -O index.html http://localhost:7575/ |

- Update the SERVER_URL variable in the environment variables to hold the Virtual IP for the front end. In the UMM database, open the microservices table and, for the tam, prompts and eabc microservices, replace the value in the ip_address column with the Virtual IP. Change the ports to the corresponding ports exposed in the docker-compose file.
- Give execute permission to the keep-command.sh script and execute it on both machines:

  chmod +x keep-command.sh

  ./keep-command.sh
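A typo in keep.env, such as a priority outside 1-255 or a wrong interface name, can go unnoticed until failover. The sketch below checks a few of the values described in the keep.env table. It assumes keep.env uses plain KEY=VALUE lines without quotes. It parses the file instead of sourcing it, because sourcing would execute the SCRIPT_VAR command. The function names and the NET_SYSFS override are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check selected keep.env values without sourcing the file.
# Assumes plain KEY=VALUE lines (Docker env-file style, no quotes).

NET_SYSFS=${NET_SYSFS:-/sys/class/net}  # hypothetical override, handy for testing

# env_value FILE KEY: prints the value of the first KEY=... line in FILE.
env_value() {
  local file=$1 key=$2 k v
  # "|| [ -n "$k" ]" also handles a last line without a trailing newline.
  while IFS='=' read -r k v || [ -n "$k" ]; do
    if [ "$k" = "$key" ]; then
      printf '%s\n' "$v"
      return 0
    fi
  done < "$file"
  return 1
}

# validate_keep_env FILE: reports problems on stderr, returns 1 if any.
validate_keep_env() {
  local file=$1 prio clear_timeout iface rc=0
  if [ ! -r "$file" ]; then
    echo "cannot read $file" >&2
    return 1
  fi

  # KEEPALIVED_PRIORITY: integer from 1 to 255
  prio=$(env_value "$file" KEEPALIVED_PRIORITY)
  case $prio in
    ''|*[!0-9]*)
      echo "KEEPALIVED_PRIORITY must be an integer, got '$prio'" >&2; rc=1 ;;
    *)
      if [ "$prio" -lt 1 ] || [ "$prio" -gt 255 ]; then
        echo "KEEPALIVED_PRIORITY must be between 1 and 255, got $prio" >&2; rc=1
      fi ;;
  esac

  # CLEARANCE_TIMEOUT: whole number of seconds (60-120 is suggested above)
  clear_timeout=$(env_value "$file" CLEARANCE_TIMEOUT)
  case $clear_timeout in
    ''|*[!0-9]*)
      echo "CLEARANCE_TIMEOUT must be a number of seconds, got '$clear_timeout'" >&2; rc=1 ;;
  esac

  # KEEPALIVED_INTERFACE: must name a network interface on this host
  iface=$(env_value "$file" KEEPALIVED_INTERFACE)
  if [ -z "$iface" ] || [ ! -e "$NET_SYSFS/$iface" ]; then
    echo "KEEPALIVED_INTERFACE '$iface' is not an interface on this host" >&2; rc=1
  fi
  return "$rc"
}
```

For example: validate_keep_env /root/keep-alived/keep.env on each node before executing keep-command.sh.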

Adding License

- Browse to http://<MACHINE_IP or FQDN>/umm in your browser (the FQDN is the domain name assigned to the IP/VIP).
- Click the red warning icon on the right, paste the license in the field and click Save.