Prerequisites

Software Requirements

| Item | Recommended | Installation guide |
|---|---|---|
| Operating System | Debian 12 | - |
| FQDN mapped to server IP address | - | - |

Hardware Requirements

| Item | Minimum |
|---|---|
| RAM | 16 GB |
| Disk space | 150 GB |
| CPU | 8 cores |

Port Utilization Requirements

The following ports must be open on the server for the voice connector to function.

| Firewall port / port range | Network protocol | Description |
|---|---|---|
| 5060:5091 | udp | SIP signaling |
| 5060:5091 | tcp | SIP signaling |
| 8021 | tcp | Media Server Event Socket |
| 16384:32768 | udp | Audio/video data in SIP, WSS, and other protocols |
| 7443 | tcp | WebRTC |
| 8115 | tcp | Voice Connector API |
| 5432 | tcp | PostgreSQL database |
| 3000 | tcp | Outbound Dialer API |
| 22 | tcp | SSH |
| 80 | tcp | HTTP |
| 443 | tcp | HTTPS |
| 1194 | udp | OpenVPN |

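The ports can be opened as follows:

- SSH into the Debian server:
  - Run `ssh username@server-ip` and enter the user password.
  - Run `su -` and enter the root password.
- For each row of the table above, run the following command, where `PORT` is the firewall port or port range and `PROTOCOL` is the associated network protocol:

      sudo iptables -A INPUT -p PROTOCOL -m PROTOCOL --dport PORT -j ACCEPT

- Save this port configuration so that it persists across reboots:

      sudo iptables-save > /etc/iptables/rules.v4

  On its own, `iptables-save` only prints the current rules. The file `/etc/iptables/rules.v4` is restored at boot by the `iptables-persistent` package (install it with `apt install -y iptables-persistent` if it is not present).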

Additional Firewall Rules

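Run the following commands as root to accept loopback traffic, replies to established connections, and ping requests, and then drop all other incoming and forwarded traffic:

    iptables -A INPUT -i lo -j ACCEPT
    iptables -A INPUT -m state --state ESTABLISHED,RELATED -j ACCEPT
    iptables -A INPUT -p icmp --icmp-type echo-request -j ACCEPT
    iptables -P INPUT DROP
    iptables -P FORWARD DROP
    iptables -P OUTPUT ACCEPT

Apply the `DROP` policies only after the port rules above, including SSH on port 22, are in place; otherwise new SSH connections to the server are blocked. Save the configuration again afterwards so that these rules also persist.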
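The firewall steps above can also be scripted. The following is a minimal POSIX shell sketch, not part of the official installer: the function name `print_firewall_rules` and the `VOICE_CONNECTOR_PORTS` list are hypothetical, and the ports are copied from the Port Utilization table. It only prints commands, putting the baseline rules first and the `DROP` policies last, so nothing changes until the output is piped to a root shell.

```shell
# Sketch: print the iptables commands for this guide; nothing is executed.
# VOICE_CONNECTOR_PORTS is a hypothetical "port-or-range protocol" list,
# one entry per line, copied from the Port Utilization table.
VOICE_CONNECTOR_PORTS='5060:5091 udp
5060:5091 tcp
8021 tcp
16384:32768 udp
7443 tcp
8115 tcp
5432 tcp
3000 tcp
22 tcp
80 tcp
443 tcp
1194 udp'

print_firewall_rules() {
  # Baseline rules: loopback, replies to existing connections, ping.
  echo "iptables -A INPUT -i lo -j ACCEPT"
  echo "iptables -A INPUT -m state --state ESTABLISHED,RELATED -j ACCEPT"
  echo "iptables -A INPUT -p icmp --icmp-type echo-request -j ACCEPT"
  # One ACCEPT rule per port or port range (iptables accepts "first:last").
  printf '%s\n' "$VOICE_CONNECTOR_PORTS" | while read -r port proto; do
    [ -n "$port" ] || continue
    echo "iptables -A INPUT -p $proto -m $proto --dport $port -j ACCEPT"
  done
  # Default policies last, so SSH (port 22) is already accepted when
  # INPUT switches to DROP.
  echo "iptables -P INPUT DROP"
  echo "iptables -P FORWARD DROP"
  echo "iptables -P OUTPUT ACCEPT"
}
```

Usage, as root: load the functions (for example with `. ./firewall.sh`, a hypothetical file name), review the output of `print_firewall_rules`, then apply it with `print_firewall_rules | sh` and persist it with `iptables-save > /etc/iptables/rules.v4`, which assumes the `iptables-persistent` package. Running it more than once appends duplicate rules.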

Setup Azure Speech Service

-

Create a Microsoft Azure Account.

-

Create an Azure Speech resource.

-

Create a billing account to use with the speech resource.

-

Note the Subscription Key, Region, and API Endpoint for this resource.

-

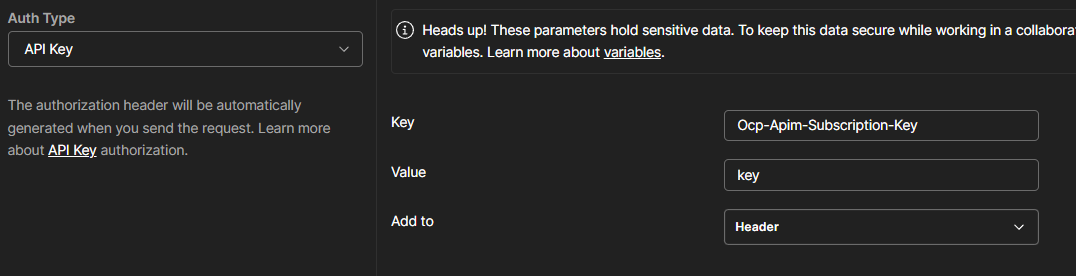

For the following APIs, use Authentication type API Key, with the Key name set to Ocp-Apim-Subscription-Key , Value set to the Subscription Key above, and the key added to the request headers.

- Obtain the list of base models using the following API, where `<endpoint>` is the API Endpoint noted above:

      GET <endpoint>/speechtotext/v3.2/models/base

- Choose a model whose `properties.deprecationDates.transcriptionDateTime` date has not yet been reached and whose `properties.features.supportsTranscriptions` field is `true`. The language code of each model is given in its `locale` field.

Example API response (shortened for brevity):

    {
      "self": "<endpoint>/speechtotext/v3.2/models/base/model-id",
      "properties": {
        "deprecationDates": {
          "adaptationDateTime": "2025-01-15T00:00:00Z",
          "transcriptionDateTime": "2026-01-15T00:00:00Z"
        },
        "features": {
          "supportsTranscriptions": true,
          "supportsEndpoints": true,
          "supportsTranscriptionsOnSpeechContainers": false,
          "supportsAdaptationsWith": [
            "Language",
            "Acoustic",
            "AudioFiles",
            "Pronunciation",
            "OutputFormatting"
          ],
          "supportedOutputFormats": [
            "Display",
            "Lexical"
          ]
        }
      },
      "lastActionDateTime": "2024-01-18T15:05:45Z",
      "createdDateTime": "2024-01-18T15:04:17Z",
      "locale": "en-US",
      "description": "en-US base model"
    }

Note the `self` field of the chosen model.
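A minimal sketch of the model query above, assuming `curl` and `jq` are installed (`apt install -y curl jq`) and that the list response wraps the models in a `values` array, as the endpoint response further down does. `AZURE_ENDPOINT` and `AZURE_KEY` are hypothetical variable names for the API Endpoint and Subscription Key. Only the first page of results is read; a long model list may be paged.

```shell
# Sketch: query the base models and keep the usable ones.
# Assumes curl and jq; AZURE_ENDPOINT and AZURE_KEY are hypothetical
# variables holding the API Endpoint and Subscription Key.

# Fetch the first page of base models from the v3.2 API.
list_base_models() {
  curl -sS --fail \
    -H "Ocp-Apim-Subscription-Key: $AZURE_KEY" \
    "$AZURE_ENDPOINT/speechtotext/v3.2/models/base"
}

# Read a model list on stdin and print "<self> <locale>" for each model that
# supports transcriptions and whose transcriptionDateTime lies after the
# reference time. Models without a transcriptionDateTime are skipped.
#   $1: optional locale filter, e.g. en-US (empty means all locales)
#   $2: optional reference time, UTC ISO 8601 (defaults to now)
select_usable_models() {
  local locale_filter now
  locale_filter="${1:-}"
  now="${2:-$(date -u +%Y-%m-%dT%H:%M:%SZ)}"
  # UTC ISO 8601 timestamps in the same format compare correctly as strings.
  jq -r --arg loc "$locale_filter" --arg now "$now" '
    .values[]
    | select(.properties.features.supportsTranscriptions == true)
    | select((.properties.deprecationDates.transcriptionDateTime // "") > $now)
    | select($loc == "" or .locale == $loc)
    | "\(.self) \(.locale)"'
}
```

Usage: `list_base_models | select_usable_models en-US` prints the `self` URL and locale of each candidate model.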

- Use the chosen model's `self` and `locale` values to create a new speech endpoint with the following API:

      POST <endpoint>/speechtotext/v3.2-preview.2/endpoints

  Request body:

      {
        "model": { "self": "<model-self>" },
        "properties": { "loggingEnabled": true },
        "locale": "<model-locale>",
        "displayName": "My New Speech Endpoint",
        "description": "This is a speech endpoint"
      }

- The response contains a list of WebSocket and REST endpoints usable for speech transcription. Pick the `links.webSocketConversation` field.

Example API response:

    {
      "values": [
        {
          "self": "https://northeurope.api.cognitive.microsoft.com/speechtotext/v3.2-preview.2/endpoints/e8e25604-a868-454e-9553-37e5cdb0bb7f",
          "model": {
            "self": "https://northeurope.api.cognitive.microsoft.com/speechtotext/v3.2-preview.2/models/base/ca751a0b-4b8c-4a80-baa7-ad1a6388488e"
          },
          "links": {
            "logs": "https://northeurope.api.cognitive.microsoft.com/speechtotext/v3.2-preview.2/endpoints/e8e25604-a868-454e-9553-37e5cdb0bb7f/files/logs",
            "restInteractive": "https://northeurope.stt.speech.microsoft.com/speech/recognition/interactive/cognitiveservices/v1?cid=e8e25604-a868-454e-9553-37e5cdb0bb7f",
            "restConversation": "https://northeurope.stt.speech.microsoft.com/speech/recognition/conversation/cognitiveservices/v1?cid=e8e25604-a868-454e-9553-37e5cdb0bb7f",
            "restDictation": "https://northeurope.stt.speech.microsoft.com/speech/recognition/dictation/cognitiveservices/v1?cid=e8e25604-a868-454e-9553-37e5cdb0bb7f",
            "webSocketInteractive": "wss://northeurope.stt.speech.microsoft.com/speech/recognition/interactive/cognitiveservices/v1?cid=e8e25604-a868-454e-9553-37e5cdb0bb7f",
            "webSocketConversation": "wss://northeurope.stt.speech.microsoft.com/speech/recognition/conversation/cognitiveservices/v1?cid=e8e25604-a868-454e-9553-37e5cdb0bb7f",
            "webSocketDictation": "wss://northeurope.stt.speech.microsoft.com/speech/recognition/dictation/cognitiveservices/v1?cid=e8e25604-a868-454e-9553-37e5cdb0bb7f"
          },
          "lastActionDateTime": "2024-07-08T06:37:37Z",
          "createdDateTime": "2024-07-08T06:37:03Z",
          "locale": "en-US",
          "displayName": "My New Speech Endpoint",
          "description": "This is a speech endpoint"
        }
      ]
    }
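A minimal sketch of the endpoint creation step, assuming `curl` and `jq` are installed and that the hypothetical variables `AZURE_ENDPOINT` and `AZURE_KEY` hold the API Endpoint and Subscription Key noted above. The function names are also hypothetical. Because only a list-shaped response is shown here, the extractor accepts either a single endpoint object or a `{"values": [...]}` list.

```shell
# Sketch: create a speech endpoint and pull out its WebSocket URL.
# Assumes curl and jq; AZURE_ENDPOINT and AZURE_KEY are hypothetical.

# POST a new endpoint for the given model.
#   $1: the model's "self" URL   $2: the model's "locale", e.g. en-US
create_speech_endpoint() {
  local body
  # Build the request body with jq so the values are JSON-escaped.
  body=$(jq -n --arg self "$1" --arg locale "$2" '{
    model: {self: $self},
    properties: {loggingEnabled: true},
    locale: $locale,
    displayName: "My New Speech Endpoint",
    description: "This is a speech endpoint"
  }')
  curl -sS --fail -X POST \
    -H "Ocp-Apim-Subscription-Key: $AZURE_KEY" \
    -H "Content-Type: application/json" \
    --data "$body" \
    "$AZURE_ENDPOINT/speechtotext/v3.2-preview.2/endpoints"
}

# Read endpoint JSON on stdin (one endpoint object or a {"values": [...]}
# list) and print each links.webSocketConversation URL that is present.
websocket_conversation_url() {
  jq -r 'if has("values") then .values[] else . end
         | .links.webSocketConversation // empty'
}
```

Usage: `create_speech_endpoint "<model-self>" "<model-locale>" | websocket_conversation_url` prints the WebSocket URL to use in the Media Server configuration.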

After these steps, you have the Subscription Key, the Azure Speech service region, and the Azure Speech service WebSocket endpoint (`links.webSocketConversation`). These are used in the Media Server configuration below.

Install Media Server

- SSH into the Debian server onto which the Media Server will be deployed:
  - Run `ssh username@server-ip` and enter the user password.
  - Run `su -` and enter the root password.
- Run the following commands:

      sudo apt update
      sudo apt install -y lua-sec certbot lua-socket lua-json lua-dkjson
      apt install -y git
      git clone -b azure_transcribe https://efcx:RecRpsuH34yqp56YRFUb@gitlab.expertflow.com/rtc/media-server-setup.git "/usr/src/fusionpbx-install.sh"
      chmod -R 777 /usr/src/fusionpbx-install.sh
      cd /usr/src/fusionpbx-install.sh/debian && ./install.sh

-

-

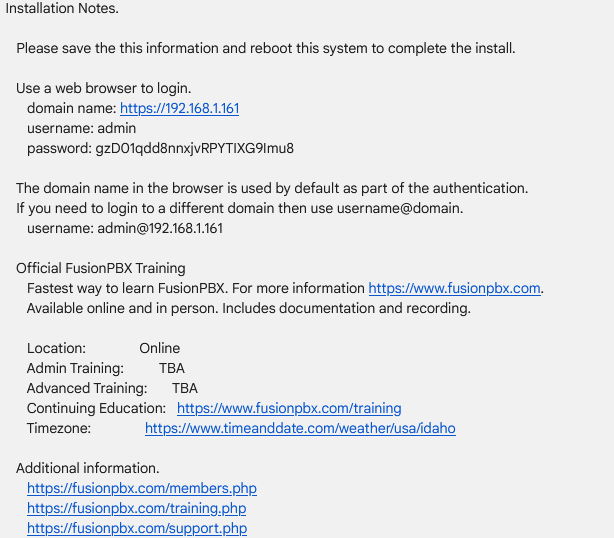

Once the installation has finished, some information will be shown as below:

-

In a web browser, open the domain name URL and use the provided username and password to log on.

-

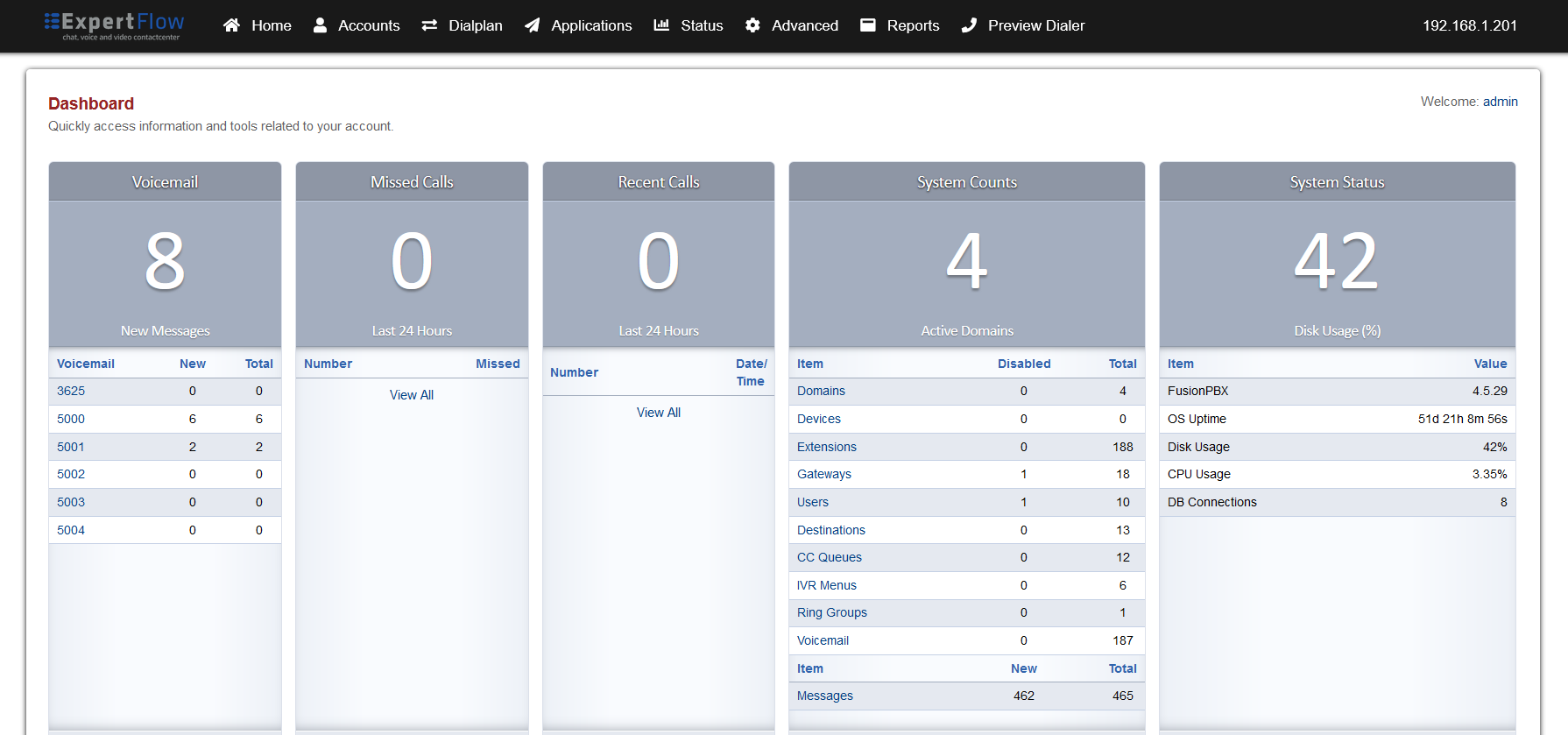

A screen like below should open for a successful installation:

-

-

If the page does not open, then go to the command line and run

systemctl stop apache2 systemctl restart nginx -

Try opening the page in Step 3b again, and if it does not open, reset the server and start the installation again.

-

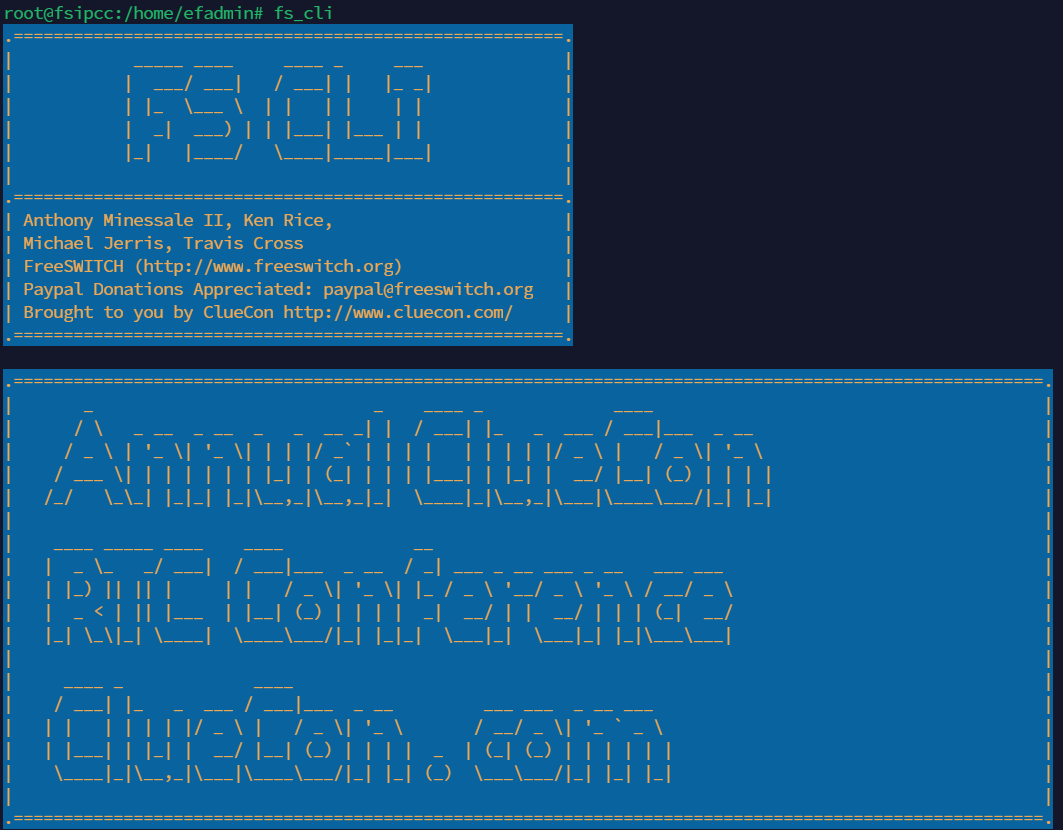

In the command line, use the command to access the Freeswitch command line as shown below:

fs_cli
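The "page does not open" check from the installation steps can be sketched as two shell functions. The names `web_ui_responds` and `fix_web_ui` are hypothetical, and `https://localhost/` is only a default; pass the Media Server's domain name URL instead. The check treats any HTTP status as "the page answers", and `-k` skips certificate verification.

```shell
# Sketch of the troubleshooting step; function names are hypothetical.

# Succeed if the URL returns any HTTP response.
#   $1: the Media Server URL (defaults to https://localhost/)
web_ui_responds() {
  local url code
  url="${1:-https://localhost/}"
  # curl writes 000 as the status code when no HTTP response arrives.
  code=$(curl -k -s -o /dev/null -w '%{http_code}' "$url" || true)
  [ -n "$code" ] && [ "$code" != "000" ]
}

# The recovery commands from the installation steps (run as root).
fix_web_ui() {
  systemctl stop apache2
  systemctl restart nginx
}
```

Usage: `web_ui_responds https://your-domain/ || { fix_web_ui; web_ui_responds https://your-domain/; }`, where `your-domain` is a placeholder. If the second check still fails, reinstall as described above.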

Configure Global Transcription

- Log in to the Media Server web interface:
  - Open `https://IP-addr` in a browser, where `IP-addr` is the IP address of the Media Server.
  - Enter the username and password that were shown upon installation and press LOGIN.

-

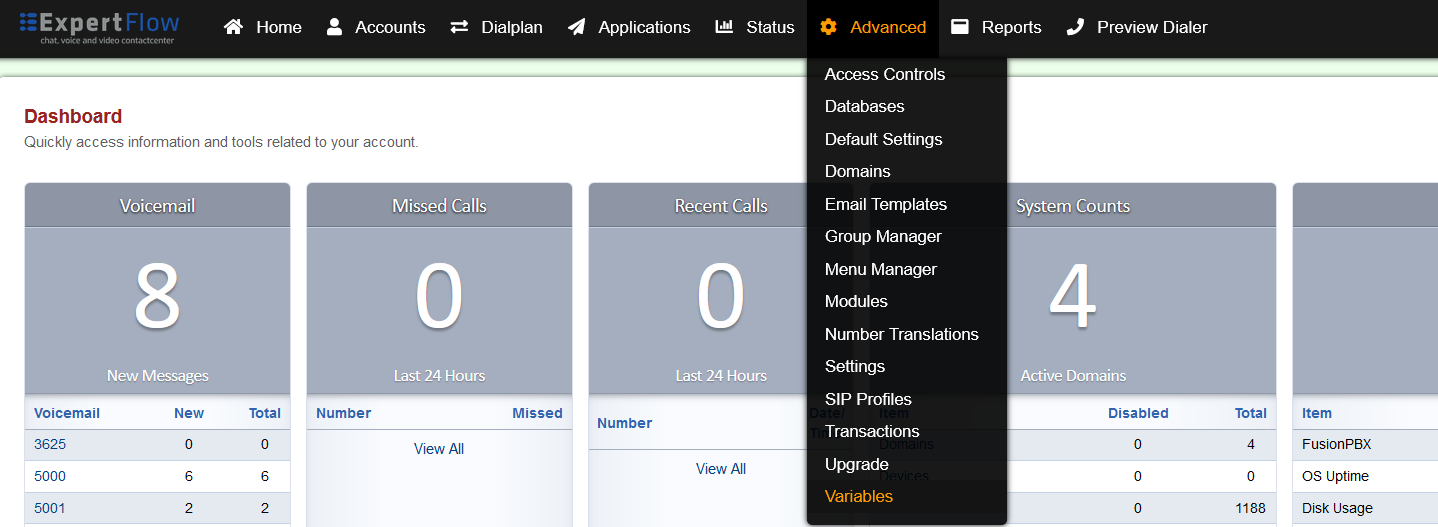

Select the Variables option from the Advanced tab.

-

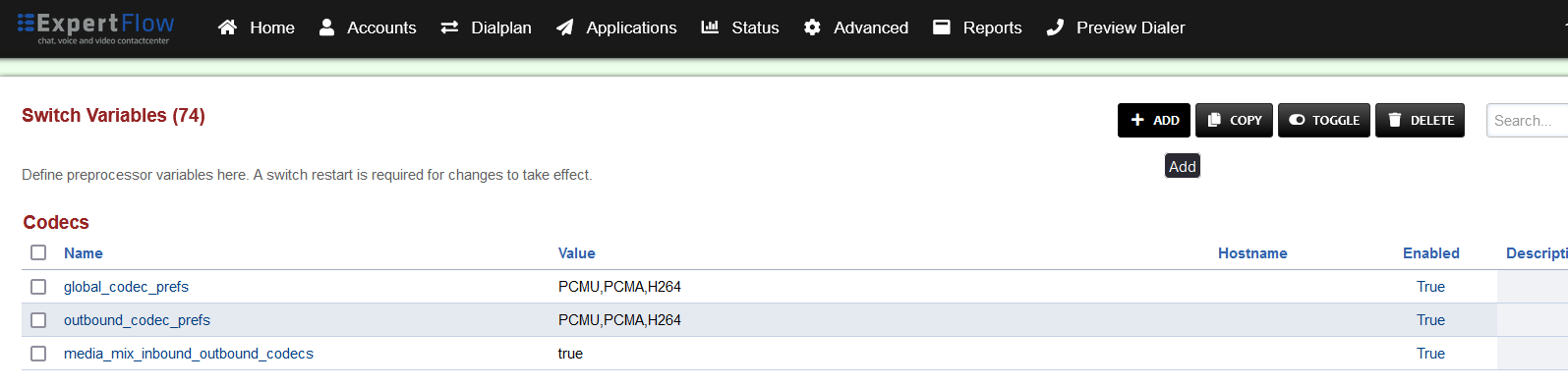

Press ADD on the top right.

-

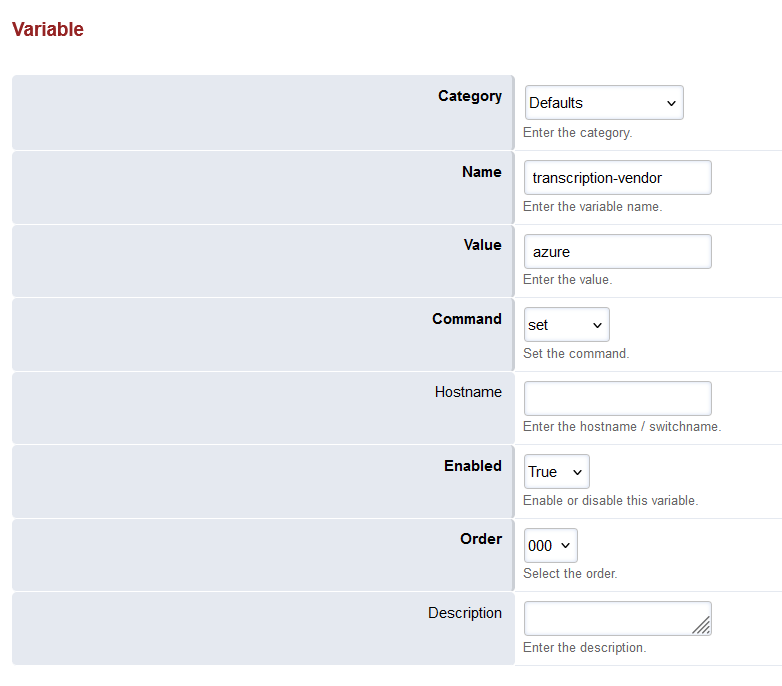

Set the Category to Defaults, Name to transcription-vendor and Value to azure.

-

Save the changes by pressing SAVE button in top right corner.

-

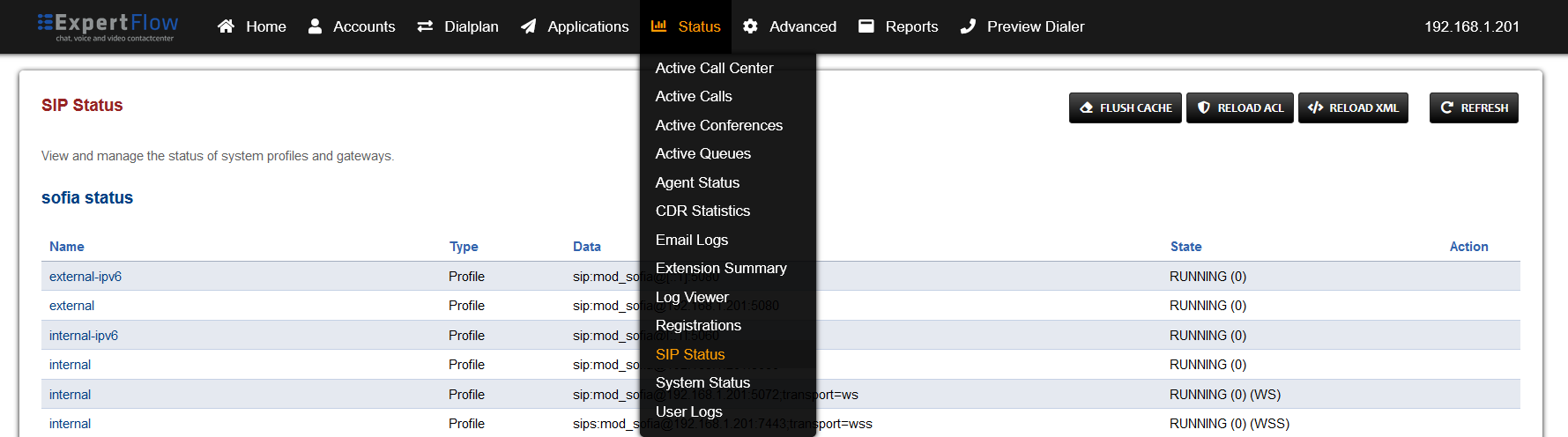

Open SIP Status under the Status tab.

-

Press the RELOAD XML button at the top right.
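To confirm from the command line that FreeSWITCH picked up the new variable, a small check can query it with the `global_getvar` API command through `fs_cli`. This is a sketch: the function name is hypothetical, and it assumes `fs_cli` can reach the Event Socket with its default settings.

```shell
# Sketch: check that FreeSWITCH sees the transcription-vendor global variable.
# Assumes fs_cli can reach the Event Socket with its default settings.
check_transcription_vendor() {
  local value
  # Ask FreeSWITCH for the global variable; whitespace is stripped.
  value=$(fs_cli -x "global_getvar transcription-vendor" | tr -d '[:space:]')
  if [ "$value" = "azure" ]; then
    echo "transcription-vendor = azure"
  else
    echo "transcription-vendor is '$value', expected 'azure'" >&2
    return 1
  fi
}
```

Usage: after pressing RELOAD XML (running `fs_cli -x reloadxml` should be equivalent), run `check_transcription_vendor`; a non-zero exit status means the variable is missing or has a different value.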

-

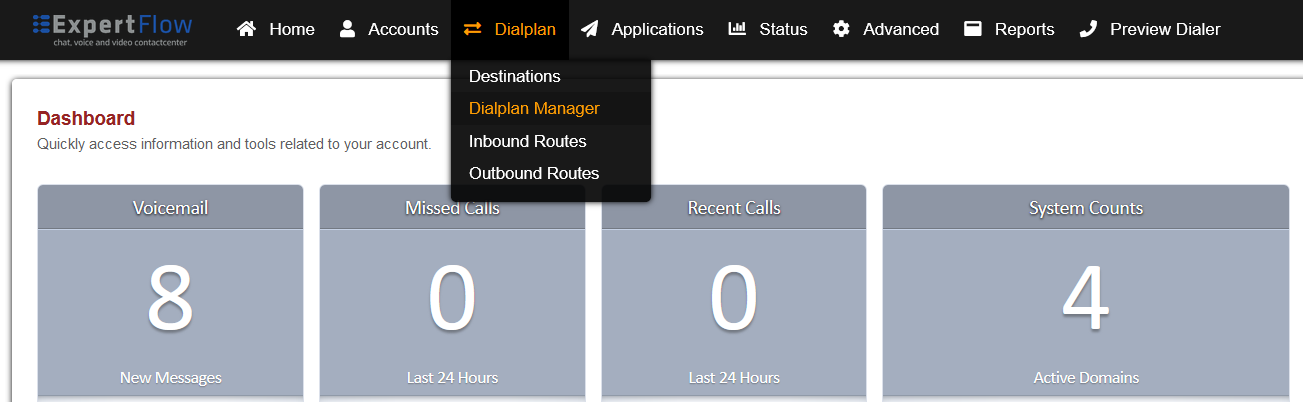

Open the Dialplan Manager section under the Dialplan tab.

-

Add a new Dialplan by pressing the ADD Button on the top.

-

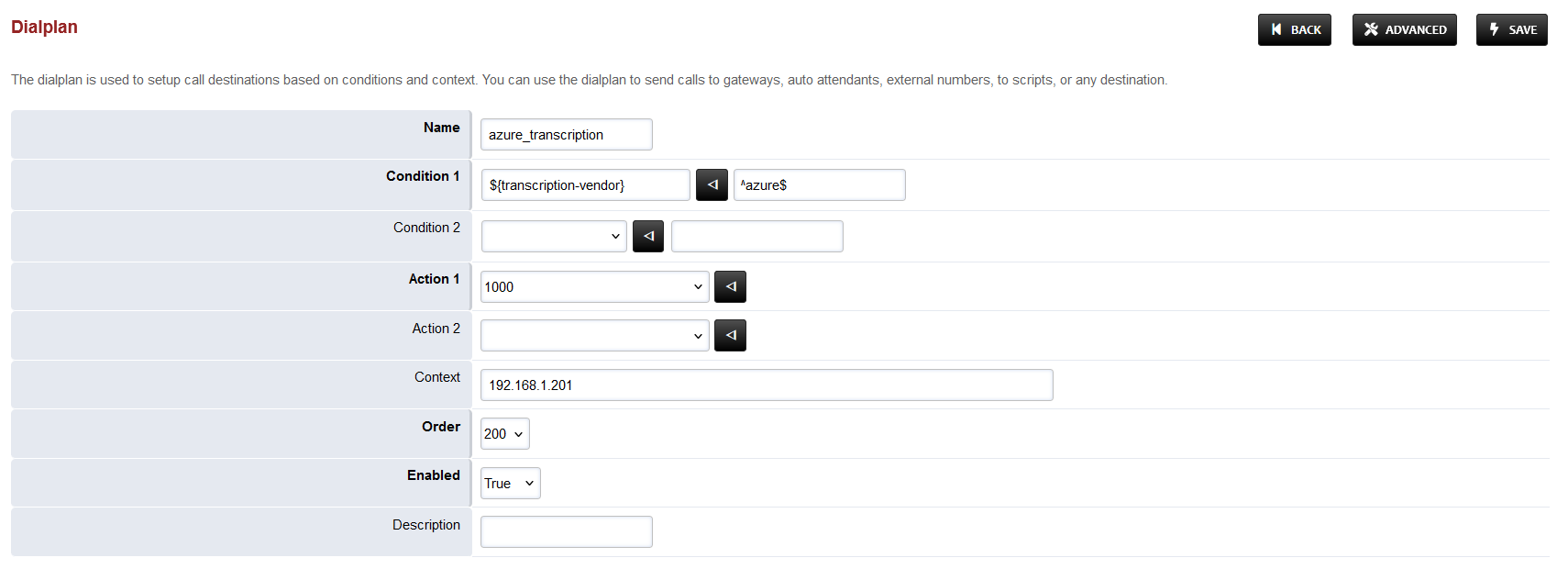

Fill the form with following details :

-

Name = azure_transcription

-

Condition 1 = Select destination_number from list and add a random number, then click to edit and paste ${transcription-vendor}

-

For the field to the right, add ^azure$

-

-

Action 1 = Select first item from the list

-

-

Save the form by pressing save button on top right Corner.

-

Re-open azure_transcription dialplan.

-

Set the Continue field to True.

-

Set the order field to 889.

-

Set the Context field to global.

-

Set the Domain field to Global.

-

Delete the second row by checking the box in the Delete column for the second row and pressing SAVE in the top right.

- Add the following information to this dialplan:

| Tag | Type | Data | Group | Order | Enabled |
|---|---|---|---|---|---|
| action | set | `START_RECOGNIZING_ON_VAD=true` | 0 | 10 | true |
| action | export | `START_RECOGNIZING_ON_VAD=true` | 0 | 15 | true |
| action | set | `AZURE_SUBSCRIPTION_KEY=<key>` | 0 | 20 | true |
| action | export | `AZURE_SUBSCRIPTION_KEY=<key>` | 0 | 25 | true |
| action | set | `AZURE_SERVICE_ENDPOINT=<endpoint>` | 0 | 30 | true |
| action | export | `AZURE_SERVICE_ENDPOINT=<endpoint>` | 0 | 35 | true |
| action | set | `AZURE_REGION=<region>` | 0 | 40 | true |
| action | export | `AZURE_REGION=<region>` | 0 | 45 | true |
| action | export | `nolocal:execute_on_answer=lua start_transcribe.lua azure <language> ${uuid}` | 0 | 50 | true |

- The values of `<key>`, `<endpoint>`, and `<region>` come from the Setup Azure Speech Service section above: the Subscription Key, the `links.webSocketConversation` WebSocket URL, and the region of the Speech resource.
- For `<language>`, choose a language tag from the Azure Speech language support list that matches the model used, e.g. `en-US`.
- Save the changes by pressing the SAVE button in the top right corner.
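Filling in the placeholders of the dialplan table by hand is error-prone, so the rows can be generated from the values collected in the Azure section. The sketch below is hypothetical tooling, not part of the Media Server: it prints one tab-separated line per table row (Order, Type, Data) in the same order as the table, with `${uuid}` kept literal so that it reaches the dialplan unchanged.

```shell
# Sketch: print the dialplan rows (Order, Type, Data) with the placeholders
# filled in. Hypothetical helper, not part of the Media Server.
#   $1: <key>   $2: <endpoint>   $3: <region>   $4: <language>, e.g. en-US
print_dialplan_data() {
  local key endpoint region language order pair kind
  key="$1"; endpoint="$2"; region="$3"; language="$4"
  order=10
  # Each variable gets a "set" row and then an "export" row, 5 apart.
  for pair in "START_RECOGNIZING_ON_VAD=true" \
              "AZURE_SUBSCRIPTION_KEY=$key" \
              "AZURE_SERVICE_ENDPOINT=$endpoint" \
              "AZURE_REGION=$region"; do
    for kind in set export; do
      printf '%s\t%s\t%s\n' "$order" "$kind" "$pair"
      order=$((order + 5))
    done
  done
  # ${uuid} is single-quoted so it is printed literally for the dialplan.
  printf '%s\t%s\t%s\n' "$order" export \
    "nolocal:execute_on_answer=lua start_transcribe.lua azure $language "'${uuid}'
}
```

Usage: `print_dialplan_data "<key>" "<endpoint>" "<region>" en-US` prints nine rows matching Orders 10 to 50 of the table.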