This document describes the steps to deploy an RKE2 Kubernetes cluster in High Availability mode using Kube-VIP.

Prerequisites

The prerequisites and cluster topologies are described in the Single Node Deployment. Please review that document before proceeding with installation in High Availability mode.

| | Node Required | vCPU | vRAM | vDisk (GiB) | Comments |
|---|---|---|---|---|---|
| RKE2 | 3 Control Plane nodes | 2 | 4 | 50 | See RKE2 installation requirements for hardware sizing, the underlying operating system, and the networking requirements. |
| CX-Core | 2 Worker nodes | 2 | 4 | 250 | If Cloud Native Storage is not available, then 2 worker nodes are required on both site-A and site-B. However, if Cloud Native Storage is accessible from both sites, 1 worker node can sustain workload on each site. |
| Superset | 1 Worker node | 2 | 8 | 250 | For reporting |

Preparing for Deployment

Kube-VIP Requirements

A VIP is a virtual IP address that remains available and moves seamlessly between the Control-Plane nodes, with one Control-Plane node active for Kube-VIP at any time. Kube-VIP works much like keepalived, except that it offers additional configuration flexibility depending on the environment. For example, Kube-VIP can work using:

- ARP: when using ARP (Layer 2), Kube-VIP uses leader election. Other modes, such as BGP, Routing Table and WireGuard, can also be used.

- In ARP mode, the VIP must be in the same subnet as all the control plane nodes.

- Kube-VIP deployment depends on at least one working RKE2 Control Plane node before other nodes (both CP and Workers) can be deployed.
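The same-subnet requirement for ARP mode can be checked before deployment. The following is a minimal sketch, assuming IPv4 addresses and a known prefix length; the helper names `ip_to_int` and `same_subnet` and the example addresses are hypothetical and not part of Kube-VIP or RKE2.

```shell
#!/usr/bin/env bash
# Sketch only: ip_to_int and same_subnet are hypothetical helper names.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local old_ifs
  old_ifs=$IFS
  IFS=.
  # Split the address on dots into the positional parameters $1..$4.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if two IPv4 addresses fall in the same network
# for the given prefix length (third argument).
same_subnet() {
  local ip1 ip2 mask
  ip1=$(ip_to_int "$1")
  ip2=$(ip_to_int "$2")
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  [ $(( ip1 & mask )) -eq $(( ip2 & mask )) ]
}
```

For example, `same_subnet 192.168.1.10 192.168.1.250 24 && echo "VIP is usable in ARP mode"` compares a hypothetical node address with a hypothetical VIP on a /24 network.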

Installation Steps

Step 1: Prepare First Control Plane

- <FQDN> is the Kube-VIP FQDN

This step is required for the Nginx Ingress controller to allow customized configurations.

Step 1. Create Manifests

- Create the necessary directories for the RKE2 deployment

mkdir -p /etc/rancher/rke2/
mkdir -p /var/lib/rancher/rke2/server/manifests/

- Generate the ingress-nginx controller config file so that the RKE2 server bootstraps it accordingly.

cat<<EOF| tee /var/lib/rancher/rke2/server/manifests/rke2-ingress-nginx-config.yaml
---
apiVersion: helm.cattle.io/v1
kind: HelmChartConfig
metadata:
  name: rke2-ingress-nginx
  namespace: kube-system
spec:
  valuesContent: |-
    controller:
      metrics:
        service:
          annotations:
            prometheus.io/scrape: "true"
            prometheus.io/port: "10254"
      config:
        use-forwarded-headers: "true"
      allowSnippetAnnotations: "true"
EOF

- Create a deployment manifest called config.yaml for the RKE2 cluster, and replace the IP addresses and corresponding FQDNs accordingly (add any other fields from the Extra Options sections to config.yaml at this point). If you are deploying worker HA, uncomment the disable entry to turn off the RKE2 ingress.

cat<<EOF|tee /etc/rancher/rke2/config.yaml
#Uncomment for Control-Plane HA: tls-san and its child entry <FQDN>
#tls-san:
#  - <FQDN>
write-kubeconfig-mode: "0644"
etcd-expose-metrics: true
etcd-snapshot-schedule-cron: "0 */6 * * *"
# Keep 56 etcd snapshots (equals 2 weeks at 4 a day, one every 6 hours)
etcd-snapshot-retention: 56
cni:
  - canal
#Uncomment for Worker HA Deployment
#disable:
#  - rke2-ingress-nginx
EOF

In the template manifest above:

- <FQDN> must point to the first control plane
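The snapshot settings in the template schedule a snapshot every 6 hours (cron `0 */6 * * *`) and retain 56 of them. As a rough sketch of how the retention count maps to days of history, assuming the interval divides 24 evenly (the helper name `retention_days` is hypothetical):

```shell
#!/usr/bin/env bash
# Sketch only: retention_days is a hypothetical helper name.

# Print how many whole days of history a retention count covers,
# given the number of hours between scheduled snapshots.
retention_days() {
  local interval_hours retention per_day
  interval_hours=$1
  retention=$2
  per_day=$(( 24 / interval_hours ))
  echo $(( retention / per_day ))
}

# Values taken from the config.yaml template: every 6 hours, keep 56.
retention_days 6 56
```

If the schedule is changed, the retention value can be recomputed the same way to keep roughly two weeks of snapshots.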

Step 2. Download the RKE2 binaries and start Installation

The following are some defaults that RKE2 uses during installation. You may change them as needed by specifying the switches listed.

| | Switch | Default | Description |
|---|---|---|---|
| To change the default deployment directory of RKE2 | | | Important Note: Moving the default destination folder to another location is not recommended. However, if there is a need to store the containers in a different partition, it is recommended to deploy containerd separately and change its destination to the partition where you have space available using |
| Default POD IP Assignment Range | | | IPv4/IPv6 network CIDRs to use for pod IPs |
| Default Service IP Assignment Range | | | IPv4/IPv6 network CIDRs to use for service IPs |

cluster-cidr and service-cidr are evaluated independently. Decide on them well before the cluster deployment. These options cannot be changed once the cluster is deployed and workloads are running.
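Because these ranges cannot be changed later, it may help to confirm up front that the chosen pod and service CIDRs do not overlap each other or the node network. The sketch below is IPv4-only, and the helper names `ip_to_int`, `cidr_bounds` and `cidrs_overlap` are hypothetical. The values 10.42.0.0/16 and 10.43.0.0/16 are used only as examples (they are commonly cited as the RKE2 defaults; confirm against the RKE2 documentation).

```shell
#!/usr/bin/env bash
# Sketch only: all function names here are hypothetical helpers.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local old_ifs
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Print the first and last address of an IPv4 CIDR block as two integers.
cidr_bounds() {
  local base prefix mask start
  base=$(ip_to_int "${1%/*}")
  prefix=${1#*/}
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  start=$(( base & mask ))
  echo "$start $(( start | (mask ^ 0xFFFFFFFF) ))"
}

# Succeed if the two IPv4 CIDR blocks share at least one address.
cidrs_overlap() {
  local a b
  a=$(cidr_bounds "$1")   # "a_start a_end"
  b=$(cidr_bounds "$2")   # "b_start b_end"
  [ "${a% *}" -le "${b#* }" ] && [ "${b% *}" -le "${a#* }" ]
}
```

For example, `cidrs_overlap 10.42.0.0/16 10.43.0.0/16 || echo "ranges are disjoint"` checks the two example ranges against each other; the same call can be repeated with the node subnet.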

- Run the following command to install RKE2.

curl -sfL https://get.rke2.io | INSTALL_RKE2_TYPE=server sh -

- Enable the rke2-server service

systemctl enable rke2-server.service

- Start the service

systemctl start rke2-server.service

The RKE2 server requires at least 10-15 minutes to bootstrap completely. You can check the status of the RKE2 server using systemctl status rke2-server. Proceed only once everything is up and running; otherwise configuration issues may occur that require redoing all the installation steps.
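Rather than checking the status by hand, the wait can be scripted. This is a minimal sketch of a generic polling loop; `wait_until` is a hypothetical helper name, and the timeout and interval values are assumptions to adjust for your environment.

```shell
#!/usr/bin/env bash
# Sketch only: wait_until is a hypothetical helper name.

# Retry a command until it succeeds or the timeout (in seconds) is reached.
# Usage: wait_until <timeout_seconds> <interval_seconds> <command> [args...]
wait_until() {
  local timeout interval waited
  timeout=$1
  interval=$2
  waited=0
  shift 2
  until "$@"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${waited}s waiting for: $*" >&2
      return 1
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
}

# Example on the first control-plane node (not executed here):
#   wait_until 900 15 systemctl is-active --quiet rke2-server
```

The example waits up to 15 minutes, polling every 15 seconds, and returns a non-zero status if the service never becomes active.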

Step 3. Kubectl Profile setup

By default, RKE2 deploys all the binaries in the /var/lib/rancher/rke2/bin path. Add this path to the system's default PATH so that the kubectl utility works properly.

echo 'export PATH=$PATH:/var/lib/rancher/rke2/bin' >> $HOME/.bashrc
echo "export KUBECONFIG=/etc/rancher/rke2/rke2.yaml" >> $HOME/.bashrc
source ~/.bashrc
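Re-running the commands above appends the same lines to .bashrc again. If the steps may be repeated, a small guard keeps the file clean. This is a sketch only; `append_once` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch only: append_once is a hypothetical helper name.

# Append a line to a file only if the exact line is not already present.
append_once() {
  local file line
  file=$1
  line=$2
  touch "$file"
  # -x matches whole lines, -F treats the line as a fixed string.
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example (not executed here); single quotes keep $PATH unexpanded
# so that it is evaluated each time .bashrc is sourced:
#   append_once "$HOME/.bashrc" 'export PATH=$PATH:/var/lib/rancher/rke2/bin'
#   append_once "$HOME/.bashrc" 'export KUBECONFIG=/etc/rancher/rke2/rke2.yaml'
```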

Step 4. Bash Completion for kubectl

- Install the bash-completion package

apt install bash-completion -y

- Set up autocompletion for the current shell, and add an alias as a short notation for kubectl

kubectl completion bash > /etc/bash_completion.d/kubectl
echo "alias k=kubectl" >> ~/.bashrc
echo "complete -o default -F __start_kubectl k" >> ~/.bashrc
source ~/.bashrc

Step 5. Install helm

- Helm is a convenient tool for deploying external components. To install Helm on the cluster, execute the following command:

curl -fsSL https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3|bash

Step 6. Enable bash completion for helm

- Generate the script for Helm bash completion

helm completion bash > /etc/bash_completion.d/helm

Create a link so that crictl works properly.

ln -s /var/lib/rancher/rke2/agent/etc/crictl.yaml /etc/crictl.yaml

Step 4: Deploy Kube-VIP

1. Decide the IP and the interface on all nodes for Kube-VIP and set these as environment variables. This step must be completed before deploying any other node in the cluster (both CP and Workers).

export VIP=<FQDN>

export INTERFACE=<Interface>

2. Import the RBAC manifest for Kube-VIP

curl https://kube-vip.io/manifests/rbac.yaml > /var/lib/rancher/rke2/server/manifests/kube-vip-rbac.yaml

3. Fetch the kube-vip image

/var/lib/rancher/rke2/bin/crictl -r "unix:///run/k3s/containerd/containerd.sock" pull ghcr.io/kube-vip/kube-vip:latest

4. Deploy the Kube-VIP

CONTAINERD_ADDRESS=/run/k3s/containerd/containerd.sock ctr -n k8s.io run \
--rm \
--net-host \
ghcr.io/kube-vip/kube-vip:latest vip /kube-vip manifest daemonset --arp --interface $INTERFACE --address $VIP --controlplane --leaderElection --taint --services --inCluster | tee /var/lib/rancher/rke2/server/manifests/kube-vip.yaml

5. Wait for the kube-vip to complete bootstrapping

kubectl rollout status daemonset kube-vip-ds -n kube-system --timeout=650s

6. Once the condition is met, you can check that the kube-vip daemonset is running 1 pod

kubectl get ds -n kube-system kube-vip-ds

Once the cluster has more control-plane nodes added, the count will be equal to the total number of CP nodes.
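The pod count check can also be scripted by comparing the ready and desired counts reported in the DaemonSet status. A minimal sketch follows; `ds_fully_ready` is a hypothetical helper name, and the kubectl call in the comment assumes the daemonset name and namespace used above.

```shell
#!/usr/bin/env bash
# Sketch only: ds_fully_ready is a hypothetical helper name.

# Succeed when a "ready/desired" pair shows that at least one pod is
# scheduled and every scheduled pod is ready.
ds_fully_ready() {
  local ready desired
  ready=${1%/*}
  desired=${1#*/}
  [ "$desired" -gt 0 ] && [ "$ready" -eq "$desired" ]
}

# Example on a control-plane node (not executed here):
#   status=$(kubectl get ds kube-vip-ds -n kube-system \
#     -o jsonpath='{.status.numberReady}/{.status.desiredNumberScheduled}')
#   ds_fully_ready "$status" && echo "kube-vip is ready on all scheduled nodes"
```

With a single control-plane node the status string is expected to read 1/1; after all three control-plane nodes have joined, it should read 3/3.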

Step 5: Remaining Control-Plane Nodes

Perform these steps on remaining control-plane nodes.

1. Create required directories for RKE2 configurations.

mkdir -p /etc/rancher/rke2/

mkdir -p /var/lib/rancher/rke2/server/manifests/

2. Create a deployment manifest called config.yaml for the RKE2 cluster, and replace the IP addresses and corresponding FQDNs accordingly (add any other fields from the Extra Options sections to config.yaml at this point).

cat<<EOF|tee /etc/rancher/rke2/config.yaml
server: https://<FQDN>:9345
token: [token from /var/lib/rancher/rke2/server/node-token on server node 1]
write-kubeconfig-mode: "0644"
tls-san:
  - <FQDN>
etcd-expose-metrics: true
cni:
  - canal
EOF

In the template manifest above:

- <FQDN> is the Kube-VIP FQDN
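To avoid hand-editing the placeholders on every additional control-plane node, the join configuration can be rendered from two values. This is a sketch under stated assumptions: `render_join_config` is a hypothetical helper name, the hostname in the example is made up, and the fields simply mirror the template above.

```shell
#!/usr/bin/env bash
# Sketch only: render_join_config is a hypothetical helper name.

# Print a config.yaml for an additional control-plane node.
# Arguments: <kube-vip FQDN> <node token from the first control-plane node>
render_join_config() {
  local fqdn token
  fqdn=$1
  token=$2
  cat <<EOF
server: https://${fqdn}:9345
token: ${token}
write-kubeconfig-mode: "0644"
tls-san:
  - ${fqdn}
etcd-expose-metrics: true
cni:
  - canal
EOF
}

# Example (not executed here); kube-vip.example.test is a made-up hostname
# and TOKEN is assumed to hold the contents of
# /var/lib/rancher/rke2/server/node-token from the first control-plane node:
#   render_join_config kube-vip.example.test "$TOKEN" | tee /etc/rancher/rke2/config.yaml
```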

Ingress-Nginx config for RKE2

By default, the RKE2-based ingress controller does not allow additional snippet information in ingress manifests. Create this config before starting the deployment of RKE2.

cat<<EOF| tee /var/lib/rancher/rke2/server/manifests/rke2-ingress-nginx-config.yaml
---
apiVersion: helm.cattle.io/v1
kind: HelmChartConfig
metadata:
  name: rke2-ingress-nginx
  namespace: kube-system
spec:
  valuesContent: |-
    controller:
      metrics:
        service:
          annotations:
            prometheus.io/scrape: "true"
            prometheus.io/port: "10254"
      config:
        use-forwarded-headers: "true"
      allowSnippetAnnotations: "true"
EOF

Step 6: Begin the RKE2 Deployment

1. Begin the RKE2 Deployment

curl -sfL https://get.rke2.io | INSTALL_RKE2_TYPE=server sh -

2. Start the RKE2 service. Starting the service takes approximately 10-15 minutes, depending on the network connection.

systemctl start rke2-server

3. Enable the RKE2 Service

systemctl enable rke2-server

4. By default, RKE2 deploys all the binaries in the /var/lib/rancher/rke2/bin path. Add this path to the system's default PATH so that the kubectl utility works properly.

export PATH=$PATH:/var/lib/rancher/rke2/bin
export KUBECONFIG=/etc/rancher/rke2/rke2.yaml

5. Also, append these lines to the current user's .bashrc file

echo 'export PATH=$PATH:/var/lib/rancher/rke2/bin' >> $HOME/.bashrc
echo "export KUBECONFIG=/etc/rancher/rke2/rke2.yaml" >> $HOME/.bashrc

Step 7: Deploy Worker Nodes

Follow the Deployment Prerequisites from the RKE2 Control Plane Deployment for each worker node before deployment, i.e. disable the firewall on all worker nodes.

On each worker node:

- Run the following command to install the RKE2 agent on the worker.

curl -sfL https://get.rke2.io | INSTALL_RKE2_TYPE="agent" sh -

- Enable the rke2-agent service by using the following command.

systemctl enable rke2-agent.service

- Create a directory by running the following command.

mkdir -p /etc/rancher/rke2/

- Add/edit /etc/rancher/rke2/config.yaml and update the following fields.

  - <Control-Plane-IP>: This is the IP for the control-plane node.
  - <Control-Plane-TOKEN>: This is the token, which can be extracted from the first control-plane node by running cat /var/lib/rancher/rke2/server/node-token

server: https://<Control-Plane-IP>:9345
token: <Control-Plane-TOKEN>

- Start the service by using the following command.

systemctl start rke2-agent.service

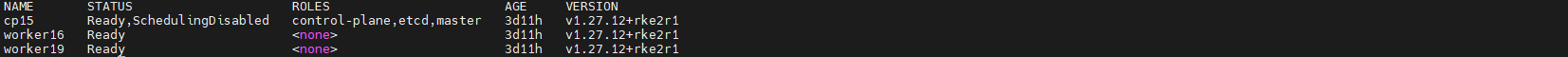

Step 8: Verify

On the control-plane node run the following command to verify that the worker(s) have been added.

kubectl get nodes -o wide

Sample output:-

- Choose storage - See Storage Solution - Getting Started