This document explains how to deploy Expertflow CX using the DigitalOcean Managed Kubernetes service.

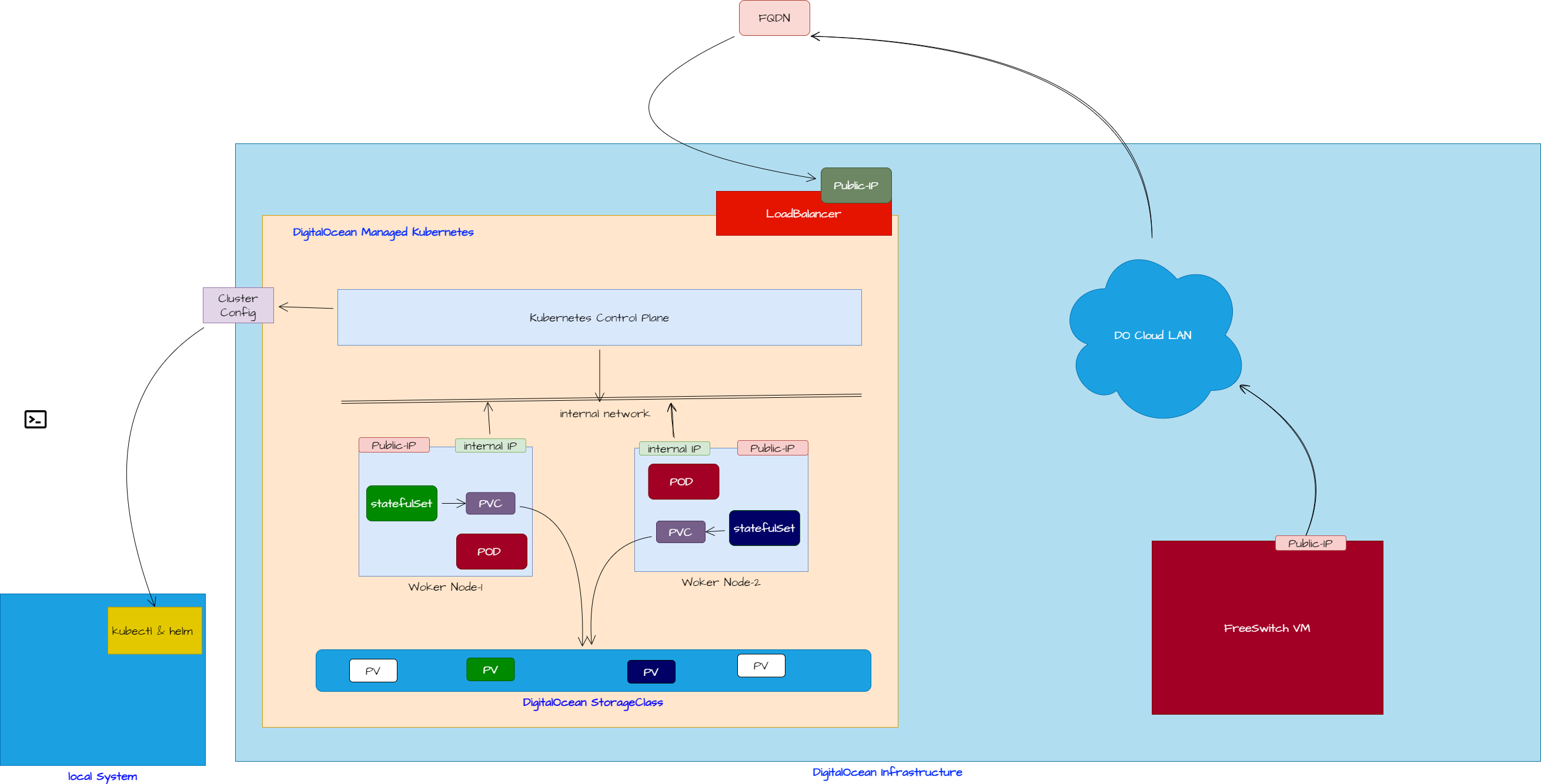

When we use DigitalOcean (DO) Kubernetes, the cluster is managed by DO, so we do not have to worry about deploying the Control Plane. The customer selects a Worker node size suitable for the workload type. The storage class and the network load balancer are provisioned automatically. Once the cluster is in the Running state, it is ready for workloads to be deployed on top of it.

Important points to remember are:

- No Control Plane nodes are required.
- A built-in, cloud-native storage class is available.
- A network load balancer is available to launch services instantly.

Technical Overview

The following diagram explains the architecture of the DigitalOcean (DO) Managed Kubernetes. It describes.....


EF-CX Deployment on DO Kubernetes Engine.

Requirements:

A local system (Windows or Linux) with internet access is required for remotely managing the Kubernetes cluster and other VMs. You will clone and modify everything on this system, but the changes will be implemented on your DO-based Kubernetes cluster.

Step 1: Create DO Kubernetes Cluster

- Log in to the DO portal and create a Kubernetes cluster with 2 worker nodes (8 GB, 4 vCPU) in your preferred region (e.g. SFO2). Creating a new cluster takes approximately 10-15 minutes.
- Once the cluster is created, download the <cluster-name>-kubeconfig.yaml file for it.
- Save the kubeconfig.yaml file on your local system so that it can be used to interact with the newly created cluster.

Login to Cluster

1. Download kubectl and helm for your system. You can follow the steps mentioned here for kubectl and here to install helm.

If you already have these utilities installed on your local system, proceed to step 2.

2. Save the kubeconfig.yaml file downloaded earlier and set the KUBECONFIG environment variable to its absolute path:

export KUBECONFIG=/path/to/download/kubeconfig.yaml

3. Run the command at your terminal to list the nodes:

kubectl get nodes -o wide

The output looks like this:

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

resident-ygu6e Ready <none> 29h v1.25.12 10.120.0.2 134.209.48.14 Debian GNU/Linux 11 (bullseye) 5.18.0-0.deb11.4-amd64 containerd://1.4.13

resident-ygu6g Ready <none> 29h v1.25.12 10.120.0.3 134.209.48.240 Debian GNU/Linux 11 (bullseye) 5.18.0-0.deb11.4-amd64 containerd://1.4.13

If the command returns a list of nodes with their corresponding details, you are successfully connected to the DO kubernetes cluster. However, if this is not the case, please talk to Expertflow CX Support Personnel for further guidance.
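
The connectivity check above could also be scripted. The following is a minimal sketch, not part of the EF-CX tooling: the helper name `not_ready_nodes` is hypothetical, and it only inspects the STATUS column of `kubectl get nodes --no-headers`.

```shell
# Hypothetical helper: reads `kubectl get nodes --no-headers` output on stdin
# and prints the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage against a live cluster (requires KUBECONFIG to be set as above):
#   kubectl get nodes --no-headers | not_ready_nodes
```

Empty output means every node reports Ready. A cordoned node (status `Ready,SchedulingDisabled`) is also listed by this simple comparison.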

Limitations

- A DigitalOcean managed Kubernetes cluster doesn't allow you to access your worker nodes directly using SSH or any other mechanism. This means you can't access or change anything on the worker nodes directly.
- To access nodePort-based services, use the IP address of any worker node. Please do not use the FQDN. The FQDN is used solely for ingress or for any other services that you configure with the type LoadBalancer.
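
To find the worker node IPs for nodePort access, something like the sketch below may help. It assumes the column layout of `kubectl get nodes -o wide` shown earlier (EXTERNAL-IP as the 7th field); the helper names and the port 30080 are illustrative only.

```shell
# Hypothetical helper: reads `kubectl get nodes -o wide --no-headers` output
# on stdin and prints the EXTERNAL-IP column (the 7th field).
node_external_ips() {
  awk '{ print $7 }'
}

# Print "<ip>:<port>" for each worker node, given a nodePort number.
nodeport_endpoints() {
  port="$1"
  node_external_ips | awk -v port="$port" '{ print $1 ":" port }'
}

# Usage against a live cluster (30080 is an example port, not an EF-CX default):
#   kubectl get nodes -o wide --no-headers | nodeport_endpoints 30080
```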

Security Concerns

Any Kubernetes cluster with production deployments exposed to the general public must be secured against attacks. To reduce the risk, consider the following:

- Limit the use of nodePorts.
- If nodePorts are still required, consider NetworkPolicy implementations, or restrict access to your nodePort services to a limited set of IP ranges. This link and link give more details about exposing services on nodePorts.
- Change default and easy-to-guess user/password pairs so that brute-force attackers find them hard to guess.
- Always use complex passwords that mix lower-case letters, upper-case letters, digits, and special characters. Expertflow123 is easy to type but also easy to guess, whereas Exp3r$f(0#123 takes considerably longer to break.
- Implement rate limits on your public services to help prevent DDoS attacks. See here for details.
- Implement OWASP and similar standards to protect data integrity.
- Keep backups fully preserved at an isolated site. This link provides details on backup management.
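
The password guidance above can be illustrated with a small check. This is only a sketch: the function name is hypothetical and the 12-character minimum is an assumption, not an EF-CX requirement.

```shell
# Hypothetical check: succeeds only if the password is at least 12 characters
# long and mixes lower-case, upper-case, digit, and special characters.
# The 12-character minimum is an assumption, not an EF-CX requirement.
is_complex_password() {
  pw="$1"
  [ "${#pw}" -ge 12 ] || return 1
  case "$pw" in *[[:lower:]]*) ;; *) return 1 ;; esac
  case "$pw" in *[[:upper:]]*) ;; *) return 1 ;; esac
  case "$pw" in *[[:digit:]]*) ;; *) return 1 ;; esac
  case "$pw" in *[![:alnum:]]*) ;; *) return 1 ;; esac
  return 0
}

# Usage with the two example passwords from the list above:
#   is_complex_password 'Expertflow123' || echo "too simple"
#   is_complex_password 'Exp3r$f(0#123' && echo "acceptable"
```

Passing this check does not make a password strong on its own; it only rejects the most obvious patterns.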

Exceptions

DO managed Kubernetes is fully compatible with on-premise RKE2 or K3s based deployments, but some exceptions must be handled due to its managed, cloud-native nature.

Step 2: Default StorageClass

By default, DO Kubernetes provisions 4 different storageClass definitions, one of which is selected as the default. We will change the default class to one that uses XFS as the file-system type. This is required because most of the components in the ef-external namespace require XFS-based volumes.

1. Get the default list of storageClass available:

kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

do-block-storage (default) dobs.csi.digitalocean.com Delete Immediate true 24h

do-block-storage-retain dobs.csi.digitalocean.com Retain Immediate true 24h

do-block-storage-xfs dobs.csi.digitalocean.com Delete Immediate true 24h

do-block-storage-xfs-retain dobs.csi.digitalocean.com Retain Immediate true 24h


2. Disable the current default, do-block-storage, so that do-block-storage-xfs-retain can be made the default. Remove the default flag from do-block-storage:

kubectl patch storageclass do-block-storage -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}'

3. Enable the do-block-storage-xfs-retain as default storage class by running the following command:

kubectl patch storageclass do-block-storage-xfs-retain -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

4. After these operations, the list of storage classes will look like this:

kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

do-block-storage dobs.csi.digitalocean.com Delete Immediate true 24h

do-block-storage-retain dobs.csi.digitalocean.com Retain Immediate true 24h

do-block-storage-xfs dobs.csi.digitalocean.com Delete Immediate true 24h

do-block-storage-xfs-retain (default) dobs.csi.digitalocean.com Retain Immediate true 24h

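
To confirm the change without reading the table by eye, a sketch along these lines could be used. The helper name `default_storage_class` is hypothetical; it relies on kubectl printing `(default)` as a separate token after the class name, as in the output above.

```shell
# Hypothetical helper: reads `kubectl get sc --no-headers` output on stdin and
# prints the name of every storage class marked "(default)".
default_storage_class() {
  awk '$2 == "(default)" { print $1 }'
}

# Usage against a live cluster:
#   kubectl get sc --no-headers | default_storage_class
```

If the output lists more than one name, both patch commands should be re-checked, since only one class is meant to carry the default flag.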

Step 3: Install the Nginx Ingress controller

On DO managed Kubernetes, no default ingress controller is available (unlike on-premise RKE2 or K3s). An Nginx-based ingress controller is required for the EF-CX solution to work.

1. Add the helm repo:

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

2. Create a file named ingress-nginx-overrides.yaml on your local system with the contents given below:

## Starter Kit nginx configuration
## Ref: https://github.com/kubernetes/ingress-nginx/tree/helm-chart-4.0.13/charts/ingress-nginx
##

controller:
  replicaCount: 2
  resources:
    requests:
      cpu: 100m
      memory: 90Mi
  service:
    type: LoadBalancer
    annotations:
      # # Enable proxy protocol
      service.beta.kubernetes.io/do-loadbalancer-enable-proxy-protocol: "true"
      # # Specify whether the DigitalOcean Load Balancer should pass encrypted data to backend droplets
      # service.beta.kubernetes.io/do-loadbalancer-tls-passthrough: "true"
      # # You can keep your existing LB when migrating to a new DOKS cluster, or when reinstalling AES
      # kubernetes.digitalocean.com/load-balancer-id: "<YOUR_DO_LB_ID_HERE>"
      # service.kubernetes.io/do-loadbalancer-disown: false

  ## Will add custom configuration options to Nginx https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/configmap/
  config:
    use-proxy-protocol: "true"
    keep-alive-requests: "10000"
    upstream-keepalive-requests: "1000"
    worker-processes: "auto"
    max-worker-connections: "65535"
    use-gzip: "true"

  # # Enable the metrics of the NGINX Ingress controller https://kubernetes.github.io/ingress-nginx/user-guide/monitoring/
  # metrics:
  #   enabled: true
  # podAnnotations:
  #   controller:
  #     metrics:
  #       service:
  #         servicePort: "9090"
  #   prometheus.io/port: "10254"
  #   prometheus.io/scrape: "true"

3. Install the nginx ingress controller using:

helm install ingress-nginx ingress-nginx/ingress-nginx --version "4.7.1" --namespace ingress-nginx --create-namespace -f ingress-nginx-overrides.yaml

4. Check the status of all the components in ingress-nginx namespace:

kubectl get all -n ingress-nginx

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-controller-7687f9d45-bw5fm 1/1 Running 0 24h

pod/ingress-nginx-controller-7687f9d45-gk4cr 1/1 Running 0 24h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx-controller LoadBalancer 10.245.121.151 134.209.140.189 80:31070/TCP,443:31950/TCP 24h

service/ingress-nginx-controller-admission ClusterIP 10.245.73.141 <none> 443/TCP 24h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ingress-nginx-controller 2/2 2 2 24h

NAME DESIRED CURRENT READY AGE

replicaset.apps/ingress-nginx-controller-7687f9d45 2 2 2 24h

5. Extract the publicly accessible IP address assigned to your NGINX ingress controller. The FQDN will point to this IP to route the EF-CX traffic.

kubectl get svc -n ingress-nginx ingress-nginx-controller --output jsonpath='{.status.loadBalancer.ingress[0].ip}'

6. Note down the IP address and share it with the customer so that they can point their domain's A record to it.
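
The load balancer can take a little while to receive its public address after the helm install. The sketch below polls until an IPv4 address appears. It is only an illustration: the function names, retry count, and delay are assumptions, and it reads the `.ip` field of the load balancer status, which corresponds to the EXTERNAL-IP column shown in step 4.

```shell
KUBECTL="${KUBECTL:-kubectl}"

# Succeeds if $1 is a dotted-quad IPv4 address with every field in 0-255.
is_ipv4() {
  printf '%s\n' "$1" | awk -F. '
    NF != 4 { exit 1 }
    { for (i = 1; i <= 4; i++) if ($i !~ /^[0-9]+$/ || $i > 255) exit 1 }
  '
}

# Print the address currently reported for the ingress controller service.
lb_ip() {
  "$KUBECTL" get svc -n ingress-nginx ingress-nginx-controller \
    --output jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Poll up to $1 times (default 30), sleeping $2 seconds (default 10) between
# attempts, and print the IP once it is assigned. Returns 1 on timeout.
wait_for_lb_ip() {
  tries="${1:-30}"
  delay="${2:-10}"
  while [ "$tries" -gt 0 ]; do
    ip="$(lb_ip 2>/dev/null)"
    if is_ipv4 "$ip"; then
      printf '%s\n' "$ip"
      return 0
    fi
    tries=$((tries - 1))
    sleep "$delay"
  done
  return 1
}

# Usage against a live cluster:
#   wait_for_lb_ip
```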

Step 4: Deploy the EF-CX Solution

Please follow this link to deploy the solution step by step on top of your DO Kubernetes cluster, but do not deploy the Ingresses for the solution yet. The ingress routes will be added in the next steps.

Step 5: SSL Certificates.

When using the DO managed Kubernetes engine, it is possible to use any of 3 different types of SSL certificates (self-signed, commercial, or Let's Encrypt), depending on the requirement.

1. Self-Signed or Commercial SSL Certificates

Self-signed and commercial SSL certificates fall into the same category, and exactly the same steps can be followed as described in the EF-CX deployment guide (https://docs.expertflow.com/x/64KsCw). Once the solution is deployed, skip to the Deploy Ingress Routes section below.

2. LetsEncrypt SSL Certificate

Pre-requisites

- FQDN to IP resolution: LE-based SSL certificate provisioning requires that the FQDN already points to the IP address of the NGINX ingress controller service (mentioned above) and that it is publicly resolvable, so that the LE validation service can reach the service and the SSL certificate can be issued.
- NGINX Service modification: This is required for LE-based SSL certificate issuance to work, as the default NGINX controller service doesn't allow inter-pod communication when using the FQDN from within the cluster. Follow the steps given below to resolve this problem.
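
The first pre-requisite can be checked from the local system with a sketch like the one below. It assumes `getent hosts` is available (glibc-based Linux); the function names are hypothetical, and the check only reflects what the local resolver sees, not global DNS propagation.

```shell
# Resolve an FQDN to its addresses. `getent hosts` is assumed to be available
# (glibc-based Linux); swap in another lookup tool on other systems.
resolve_fqdn() {
  getent hosts "$1" | awk '{ print $1 }'
}

# Succeeds if one of the resolved addresses equals the expected IP.
fqdn_points_to() {
  fqdn="$1"
  expected_ip="$2"
  resolve_fqdn "$fqdn" | grep -qxF "$expected_ip"
}

# Usage (example values taken from this guide):
#   fqdn_points_to cloud.expertflow.com 134.209.140.189 && echo "DNS is ready"
```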

LetsEncrypt NGINX Service Enhancement for DO Kubernetes Engine

1. Extract the current service manifest of the nginx ingress controller by running:

kubectl -n ingress-nginx get svc ingress-nginx-controller -o yaml > /tmp/nginx-svc.yaml

2. Add the annotation given below to the Service, under metadata.annotations:

service.beta.kubernetes.io/do-loadbalancer-hostname: "cloud.expertflow.com"

Please do not change anything else in this file. Just add the annotation above to the already existing annotations.

`cloud.expertflow.com` is the example FQDN used for demonstration; change it to your FQDN.

3. Save the changes and apply them:

kubectl apply -f /tmp/nginx-svc.yaml
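
As a possible alternative to editing the exported manifest by hand, the same annotation can be set with `kubectl annotate`. This is a sketch that should be equivalent in effect, not the procedure from the EF-CX guide; the wrapper name `annotate_lb_hostname` and the `KUBECTL` variable are illustrative.

```shell
KUBECTL="${KUBECTL:-kubectl}"

# Hypothetical wrapper: sets the DO load balancer hostname annotation on the
# ingress controller Service, replacing any existing value.
annotate_lb_hostname() {
  fqdn="$1"
  "$KUBECTL" -n ingress-nginx annotate svc ingress-nginx-controller \
    "service.beta.kubernetes.io/do-loadbalancer-hostname=$fqdn" --overwrite
}

# Usage (replace the example FQDN with your own):
#   annotate_lb_hostname cloud.expertflow.com
```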

3. Create LE based SSL Certificate

In order to use LE-based SSL certificates, please follow these steps:

1. Make sure cert-manager is installed in the cluster. Verify the cert-manager deployment by running the following command:

kubectl get pods --namespace cert-manager

The output will look something like this:

NAME READY STATUS RESTARTS AGE

cert-manager-578cd6d964-hr5v2 1/1 Running 0 99s

cert-manager-cainjector-5ffff9dd7c-f46gf 1/1 Running 0 100s

cert-manager-webhook-556b9d7dfd-wd5l6 1/1 Running 0 99s

However, if the cert-manager is not deployed, you can deploy it using steps mentioned in the EF-CX deployment guide ( https://docs.expertflow.com/x/64KsCw )

2. Create a Cluster Issuer Resource:

kubectl apply -f - <<EOF
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
  namespace: cert-manager
spec:
  acme:
    # The ACME server URL
    server: https://acme-v02.api.letsencrypt.org/directory
    # Email address used for ACME registration
    email: nasir.mehmood@expertflow.com
    # Name of a secret used to store the ACME account private key
    privateKeySecretRef:
      name: letsencrypt-prod
    # Enable the HTTP-01 challenge provider
    solvers:
    - http01:
        ingress:
          class: nginx
EOF

3. Verify the cluster Issuer status:

kubectl get ClusterIssuer

NAME READY AGE

letsencrypt-prod True 18h

The READY column shows True once cert-manager has been able to communicate with the ACME server and register the account.
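
For scripted deployments, the READY check could be automated roughly as follows. This is a sketch that parses the table output shown above; the helper name `issuer_ready` is hypothetical.

```shell
# Hypothetical helper: reads `kubectl get clusterissuer --no-headers` output on
# stdin and succeeds only if the named issuer has READY equal to "True".
issuer_ready() {
  awk -v name="$1" '
    $1 == name && $2 == "True" { found = 1 }
    END { exit !found }
  '
}

# Usage against a live cluster:
#   kubectl get clusterissuer --no-headers | issuer_ready letsencrypt-prod \
#     && echo "issuer is ready"
```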

Step 6: Deploy Ingress Routes (Let's Encrypt SSL)

1. Add the annotation given below to the ingress manifests in cim/Ingresses/nginx/*.yaml:

cert-manager.io/cluster-issuer: "letsencrypt-prod"

You can use sed to add this annotation to all the ingress manifests.

To do this programmatically, create a file, e.g. /tmp/nginx.temp, containing the annotation above with proper indentation before the line (normally 4 white spaces are required), then run the sed command given below to add it to all YAML files.

Step 1:

echo "    cert-manager.io/cluster-issuer: \"letsencrypt-prod\"" > /tmp/nginx.temp

Step 2:

sed -i '/annotations:$/ r /tmp/nginx.temp' cim/Ingresses/nginx/*

2. Apply all the ingresses:

kubectl apply -f cim/Ingresses/nginx/

3. When applying the ingress manifests, warning messages like the following can be safely ignored:

Warning: path /360connector(/|$)(.*) cannot be used with pathType Prefix

ingress.networking.k8s.io/360connector created

Warning: path /agent-manager(/|$)(.*) cannot be used with pathType Prefix

ingress.networking.k8s.io/agent-manager created

Warning: path /bot-framework(/|$)(.*) cannot be used with pathType Prefix

ingress.networking.k8s.io/bot-framework created

Warning: path /business-calendar(/|$)(.*) cannot be used with pathType Prefix

ingress.networking.k8s.io/business-calendar created

Warning: path /ccm(/|$)(.*) cannot be used with pathType Prefix

4. Verify that the Ingresses are created correctly and that the FQDN is properly assigned to every ingress. Given below is the list of ingresses for the FQDN cloud.expertflow.com:

kubectl get ing -A

NAMESPACE NAME CLASS HOSTS ADDRESS PORTS AGE

ef-external ef-grafana <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

ef-external keycloak <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow 360connector <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow agent-manager <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow bot-framework <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow business-calendar <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow ccm <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow cim-customer <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow conversation-manager <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow customer-widget <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow default <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow facebook-connector <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow file-engine <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow instagram-connector <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow license-manager <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow realtime-reports <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow routing-engine <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow smpp-connector <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow team-announcement <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow twilio-connector <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow unified-admin <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow unified-agent <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow unified-agent-assets <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow web-channel-manager <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

expertflow web-widget <none> cloud.expertflow.com cloud.expertflow.com 80, 443 18h

5. Access your solution at https://{{FQDN}}/, which should present the unified-admin page.
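
Before running the sed command from item 1 of this step on the real manifests, it may be worth trying it on a throwaway copy. The sketch below does that with a made-up sample manifest (it is not an actual EF-CX ingress) and assumes GNU sed, whose `-i` and `r` behaviour the original command relies on.

```shell
# Work in a temporary directory so no real manifest is touched.
workdir="$(mktemp -d)"

# A made-up sample manifest with an annotations block indented by 2 spaces.
cat > "$workdir/sample-ingress.yaml" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sample
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx
EOF

# The annotation line, indented by 4 spaces to sit under "annotations:".
printf '    cert-manager.io/cluster-issuer: "letsencrypt-prod"\n' \
  > "$workdir/annotation.temp"

# Append the annotation file after every line ending in "annotations:".
sed -i "/annotations:\$/ r $workdir/annotation.temp" "$workdir/sample-ingress.yaml"

# Keep the result for inspection, then clean up.
patched="$(cat "$workdir/sample-ingress.yaml")"
rm -rf "$workdir"
printf '%s\n' "$patched"
```

If the real manifests indent the `annotations:` key differently, the number of leading spaces in the annotation file has to be adjusted to match.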

Step 7: Freeswitch Deployment

For FreeSwitch deployment, please follow the steps below:

1. Create a new VM (droplet) in the DO portal. The VM should be created separately from the DO managed Kubernetes cluster, as Kubernetes-based deployments of FreeSwitch are not yet available.

2. Once the VM is ready, SSH into the IP address assigned to the VM using the password that you provided during the VM creation process (the public key method is also available in case a password is not used).

3. Follow the steps mentioned in the FreeSwitch deployment Guide.

References