Purpose

The purpose of this document is to describe the Kubernetes deployment platform, its prerequisites, the system requirements for deployment, and how to choose a distribution.

Intended Audience

This document is intended for IT operations personnel and system administrators who want to deploy Expertflow CX using Kubernetes. Familiarity with computer networking and storage components is preferable.

Introduction

A Kubernetes platform is one of the solution prerequisites. Expertflow is not responsible for managing or supporting the underlying Kubernetes distribution.

Any vanilla Kubernetes distribution can be chosen to deploy Expertflow CX. Expertflow CX has been tested on two distributions: K3s and RKE2.

Each distribution and deployment mode is described separately below.

Choosing a Distribution

It is recommended to deploy the solution on one of the compatible distributions mentioned above.

K3s Distribution

K3s is a lightweight, open-source Kubernetes distribution designed for resource-constrained environments. It aims to provide a simplified installation process, reduced memory footprint, and optimized performance while maintaining full compatibility with the Kubernetes API. K3s is packaged as a single <70MB binary that reduces the dependencies and steps needed to install and run Kubernetes. The installation steps are given here.

RKE2 Distribution

RKE2, also known as RKE Government, is Rancher's next-generation Kubernetes distribution. It is designed to simplify the installation, configuration, and management of Kubernetes clusters in production environments. RKE2 focuses on security, scalability, and ease of use, providing a streamlined approach to deploying and operating Kubernetes clusters. The installation steps are given here.

MicroK8s

MicroK8s is a lightweight Kubernetes distribution designed to run on local systems. Canonical, the open-source company that is the main developer of MicroK8s, describes the platform as a “low-ops, minimal production” Kubernetes distribution. MicroK8s may suit you if you want a production-grade Kubernetes cluster without managing complex configurations. Note that its high-availability mode still requires at least three nodes.

kubeadm

Using kubeadm, you can create a minimum viable Kubernetes (k8s) cluster that conforms to best practices. In fact, you can use kubeadm to set up a cluster that will pass the Kubernetes Conformance tests. kubeadm also supports other cluster lifecycle functions, such as cluster upgrades.

Choosing a Mode

The distributions above can be deployed in multiple modes, such as:

Single-Node (Without HA)

A Kubernetes Single-Node (without HA) configuration refers to a Kubernetes cluster with only one control plane node (master node) and no high availability (HA) features. This single control plane node manages the cluster's operations, including scheduling, scaling, and orchestrating containerized applications. Because there is no HA, if the master node fails, the entire cluster may become inaccessible.

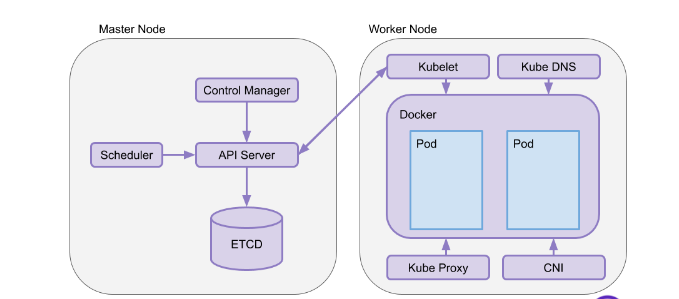

The architecture diagram of Single node (without HA) is given as follows:


Multi-Node (Without HA)

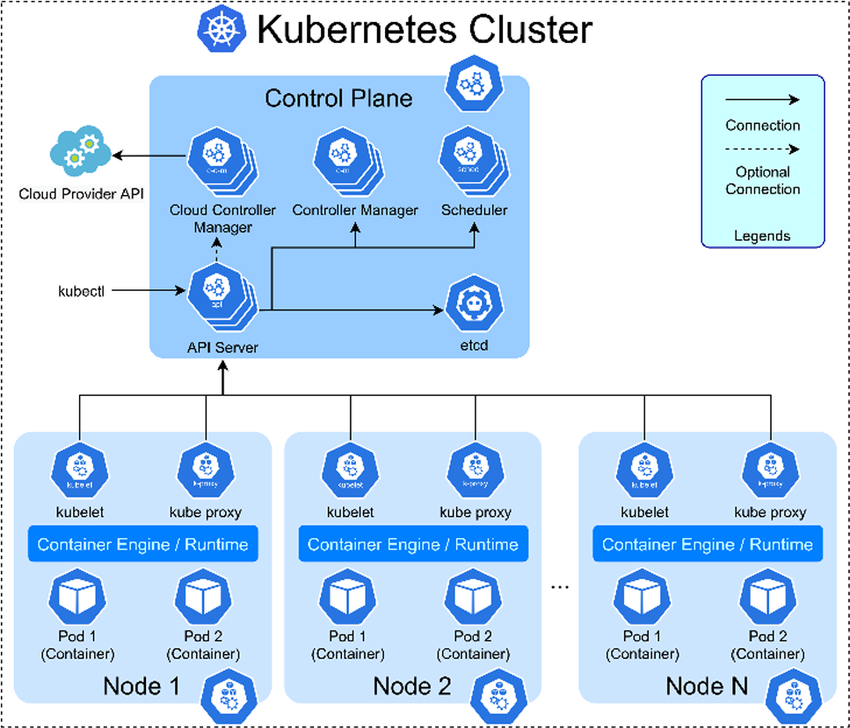

-A Kubernetes Multi-Node (without HA) configuration refers to setting up a Kubernetes cluster with multiple worker nodes and a single master node, but without high availability (HA) features. In this configuration, the master node serves as the control plane and manages the cluster, while the worker nodes execute the workload and run containerized applications. However, without HA, if the master node fails, the entire cluster's control plane becomes unavailable.

A multi-node configuration refers to multiple worker nodes managed by a single master node. The master node manages the cluster while worker nodes execute the workload and run containerized applications.

If the master node (control-plane) fails, worker nodes are orphaned and the cluster becomes unavailable.

A typical diagram of multi node configuration (without HA):

High Availability

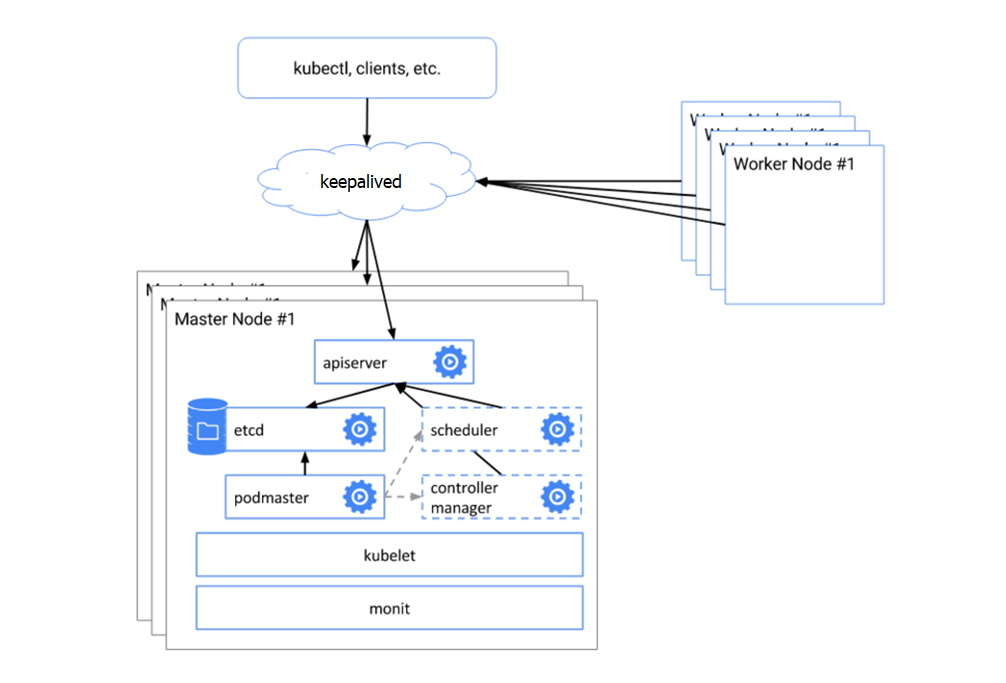

A Kubernetes High Availability (HA) configuration refers to setting up a cluster with redundant components to ensure continuous operation and resilience in the face of failures. In an HA setup, there are multiple master nodes, typically three or more, forming a control plane cluster. This cluster operates in an active-active or active-passive mode, where one or more nodes are actively serving traffic while others are ready to take over in case of failure.

The architecture diagram of High Availability is given as follows:

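A little arithmetic shows why HA setups use three or more control-plane nodes. This assumes the control plane stores its state in etcd, as in RKE2 and in K3s HA clusters that use embedded etcd. Under that assumption, the cluster stays writable only while a majority (quorum) of control-plane nodes is up. The sketch below computes that quorum and the number of control-plane failures each cluster size tolerates. It also maps node counts to the modes in this section. The function names are illustrative and are not part of any Kubernetes tool.

```python
def quorum(control_plane_nodes: int) -> int:
    """Smallest majority of control-plane (etcd) members."""
    if control_plane_nodes < 1:
        raise ValueError("a cluster needs at least one control-plane node")
    return control_plane_nodes // 2 + 1


def tolerated_failures(control_plane_nodes: int) -> int:
    """Control-plane nodes that can fail while a quorum is still up."""
    return control_plane_nodes - quorum(control_plane_nodes)


def deployment_mode(control_plane_nodes: int, worker_nodes: int) -> str:
    """Map node counts to the deployment modes described in this document."""
    if tolerated_failures(control_plane_nodes) >= 1:
        return "High Availability"
    if control_plane_nodes == 1 and worker_nodes == 0:
        return "Single-Node (Without HA)"
    # Two control-plane nodes still tolerate zero failures, so they are not HA.
    return "Multi-Node (Without HA)"


if __name__ == "__main__":
    for cp, workers in [(1, 0), (1, 3), (2, 3), (3, 3), (5, 3)]:
        print(f"{cp} control-plane, {workers} workers: "
              f"{deployment_mode(cp, workers)}, "
              f"survives {tolerated_failures(cp)} control-plane failure(s)")
```

The two-node case shows why an even number of control-plane nodes adds no fault tolerance over a single node: losing either node breaks the quorum.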

Layered Networks Cluster

The cluster is provisioned with separate networks for:

- Cluster traffic
- Pod traffic
- Storage traffic

Please note that this topology requires expertise to manage and maintain the cluster smoothly. A poorly configured setup may result in degraded performance and longer outages when something goes wrong.
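The layered topology relies on the cluster, pod, and storage networks being distinct. One sanity check worth running while planning it is to confirm that their address ranges do not overlap. The sketch below does this with Python's standard `ipaddress` module. The CIDRs in it are hypothetical placeholders, not values required by Expertflow CX or by any distribution.

```python
import ipaddress
from itertools import combinations


def overlapping_networks(networks: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named networks whose address ranges overlap."""
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    return [
        (a, b)
        for a, b in combinations(parsed, 2)
        if parsed[a].overlaps(parsed[b])
    ]


if __name__ == "__main__":
    # Hypothetical CIDRs for the three layers; replace them with your own plan.
    layered = {
        "cluster": "192.168.10.0/24",
        "pod": "10.42.0.0/16",
        "storage": "192.168.20.0/24",
    }
    print(overlapping_networks(layered))  # []
    # A storage range carved out of the cluster range is reported as a clash.
    print(overlapping_networks({"cluster": "192.168.0.0/16",
                                "storage": "192.168.20.0/24"}))
    # [('cluster', 'storage')]
```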


Choosing Storage

There are two recommended storage options:

- Longhorn for Replicated Storage: This deployment model is suited to lighter-scale cluster workloads. Use it with caution, because Longhorn requires additional hardware resources in a production cluster. If Longhorn is the only option, consider deploying it only on dedicated nodes in the cluster using node affinity.

- OpenEBS (Local Volume Provisioning): Deploying OpenEBS uses the nodes' local storage as target devices. It can only be used in the following scenarios:
  - Deployment of StatefulSets using nodeSelectors. In this deployment model, each StatefulSet is confined to a particular node so that it always runs on the same node. However, this works against the high availability of the StatefulSet services: when a worker node goes down, the StatefulSet services confined to it are unavailable until the node recovers.
  - Deployment of StatefulSets with high-level replication, using local disks on each node. This deployment model gives the flexibility of having at least 3 nodes, each running the complete set of services.

  Details on OpenEBS can be read here.
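The scheduling rules in the storage options above can be sketched as Kubernetes pod-spec fragments, written here as plain Python dicts.

- `pin_to_node` uses the well-known `kubernetes.io/hostname` node label to confine a StatefulSet's pods to one node, as in the first OpenEBS scenario.
- `dedicated_storage_affinity` expresses a required node-affinity rule of the kind suggested for Longhorn. It assumes a hypothetical `storage-node=true` label on the dedicated nodes. How such a rule is applied to Longhorn's own components depends on how Longhorn is installed.
- `available` compares the two OpenEBS scenarios when a node fails. It assumes each replicated StatefulSet needs a majority of its replicas, as quorum-based datastores do.

All node and StatefulSet names are hypothetical.

```python
def pin_to_node(hostname: str) -> dict:
    """Pod-spec fragment confining a StatefulSet's pods to a single node."""
    return {"nodeSelector": {"kubernetes.io/hostname": hostname}}


def dedicated_storage_affinity(key: str = "storage-node", value: str = "true") -> dict:
    """Pod-spec fragment that only allows nodes labelled key=value (hypothetical label)."""
    return {
        "affinity": {
            "nodeAffinity": {
                "requiredDuringSchedulingIgnoredDuringExecution": {
                    "nodeSelectorTerms": [
                        {"matchExpressions": [
                            {"key": key, "operator": "In", "values": [value]}
                        ]}
                    ]
                }
            }
        }
    }


def available(placement: dict[str, list[str]], down: set[str]) -> dict[str, bool]:
    """Mark a StatefulSet available if a majority of its replicas sit on healthy nodes."""
    result = {}
    for name, nodes in placement.items():
        healthy = sum(1 for node in nodes if node not in down)
        result[name] = healthy > len(nodes) // 2
    return result


if __name__ == "__main__":
    # Scenario 1: each StatefulSet pinned to one node via nodeSelector.
    pinned = {"sts-a": ["worker-1"], "sts-b": ["worker-1"], "sts-c": ["worker-2"]}
    # Scenario 2: every StatefulSet replicated across three nodes with local disks.
    three = ["worker-1", "worker-2", "worker-3"]
    replicated = {name: three for name in ("sts-a", "sts-b", "sts-c")}

    print(pin_to_node("worker-1"))
    print(available(pinned, {"worker-1"}))
    # {'sts-a': False, 'sts-b': False, 'sts-c': True}
    print(available(replicated, {"worker-1"}))
    # {'sts-a': True, 'sts-b': True, 'sts-c': True}
```

With one worker down, the pinned StatefulSets on that node become unavailable, while the replicated ones keep a majority of their replicas.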