Purpose

Geographical cluster is an enhancement over the standard Kubernetes high availability deployment that adds Disaster Recovery (DR) across multiple regions or locations. Currently, Expertflow CX supports a geographically spanned cluster with the topology explained in High Availability with DNS, where the cluster is deployed in 3 different geographic regions (also known as sites). However, the same cluster can also be provisioned using High Availability using External Load Balancer or High Availability with Kube-VIP. Please refer to each of these pages to understand its basic set of requirements.

For brevity, the term "region" is used for a geographical location, especially in diagrams.

Multi-Master Kubernetes HA

In this deployment option, the Kubernetes HA cluster requires three geographical regions for the deployment of Expertflow CX.

Provided that:

-

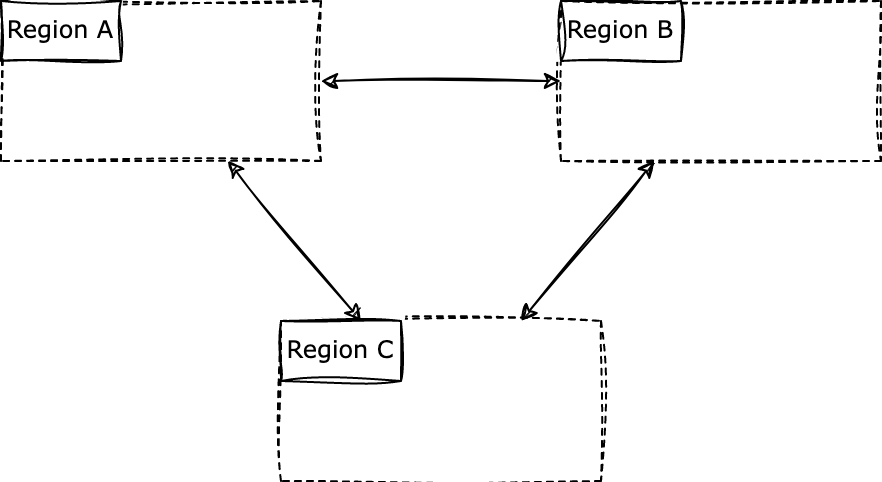

All three regions are connected to each other independently,

-

and in such a way that one link failure does not affect the connection of remaining two connections:
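The independence requirement can be reviewed on paper before deployment. The Python sketch below is one way to do it, assuming a hypothetical model in which each inter-region link is described by the physical components it depends on (fibre route, carrier, and so on); all link and component names are illustrative only. If two links depend on the same component, a single failure of that component would take down both links, which violates the requirement.

```python
"""Sketch: detect inter-region links that share a failure point.

Assumption (hypothetical model): each link lists the physical components it
depends on. Links are independent only if no component appears in two links.
"""
from itertools import combinations


def shared_components(links: dict[str, set[str]]) -> dict[tuple[str, str], set[str]]:
    """Map each pair of links to the components they have in common (if any)."""
    overlaps = {}
    for (name_a, parts_a), (name_b, parts_b) in combinations(links.items(), 2):
        common = parts_a & parts_b
        if common:
            overlaps[(name_a, name_b)] = common
    return overlaps


if __name__ == "__main__":
    # Hypothetical inventory of the three inter-region links.
    links = {
        "region-a<->region-b": {"fibre-route-1", "carrier-x"},
        "region-a<->region-c": {"fibre-route-2", "carrier-y"},
        "region-b<->region-c": {"fibre-route-3", "carrier-x"},
    }
    for (a, b), common in shared_components(links).items():
        print(f"{a} and {b} both depend on {sorted(common)}")
```

In this sample, the region-a<->region-b and region-b<->region-c links both rely on carrier-x, so the pair is reported as a shared failure point; an empty result means no single component can take down two links.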

Minimum Quorum

One Kubernetes Control-Plane node is active in every geographical region (a minimum of 3 in total). The minimum quorum requirements are as follows:

- 3 Control-Plane nodes are required to tolerate the loss of one node (N-1 quorum).
- 5 Control-Plane nodes are required to tolerate the loss of two nodes (N-2 quorum).

If the above quorum is not met, the cluster is considered failed and must either be rebuilt from scratch, or the failed Control-Plane node must be re-added manually from scratch. Refer to HA Control-Plane Node Failover.

In a 3-site cluster, if two regions fail at the same time, Kubernetes suspends its services and the cluster has to be reconfigured or recreated from a backup.
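The quorum figures above follow from the majority rule used by etcd, the datastore behind the Kubernetes control plane: a cluster of n members stays available only while floor(n/2) + 1 of them are reachable. The Python sketch below illustrates that arithmetic for the three-region case, assuming stacked etcd (one etcd member per Control-Plane node); the region names and node layouts are hypothetical.

```python
"""Sketch: majority quorum for Control-Plane (etcd) members spread across regions.

Assumption: stacked etcd, i.e. one etcd member per Control-Plane node.
Region names and layouts are hypothetical.
"""
from itertools import combinations


def quorum(members: int) -> int:
    """Smallest strict majority of `members` (floor(n/2) + 1)."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """Number of members that can fail while a majority is still available."""
    return members - quorum(members)


def survives_region_loss(nodes_per_region: dict[str, int], failed: set[str]) -> bool:
    """True if the regions that are still up hold a majority of all members."""
    total = sum(nodes_per_region.values())
    alive = sum(n for region, n in nodes_per_region.items() if region not in failed)
    return alive >= quorum(total)


if __name__ == "__main__":
    for n in (3, 5):
        print(f"{n} members: quorum={quorum(n)}, tolerates {fault_tolerance(n)} failure(s)")

    layouts = {
        "3 nodes (1/1/1)": {"region-a": 1, "region-b": 1, "region-c": 1},
        "5 nodes (2/2/1)": {"region-a": 2, "region-b": 2, "region-c": 1},
    }
    for label, layout in layouts.items():
        for k in (1, 2):
            for failed in combinations(layout, k):
                ok = survives_region_loss(layout, set(failed))
                print(f"{label}, lose {', '.join(failed)}: {'keeps' if ok else 'loses'} quorum")
```

With five members placed 2/2/1 across the three regions, the same check shows that losing any single region keeps quorum while losing any two regions does not, which is consistent with the 3-site behaviour described above.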

Requirements

Below are the hard requirements from a geographical deployment perspective:

- Stable (preferably dual-pathed) network connectivity between all 3 regions.
- A maximum round-trip time (RTT) of 10 ms to 12 ms between regions, so that cluster operations work efficiently.
- Network bandwidth with priority assigned to the Kubernetes nodes (for example, QoS-based priority routing), so that services do not experience replication delays.

The above requirements are needed to ensure that:

- Control-Plane nodes stay in sync with each other, keeping the cluster information consistent (the etcd cluster).
- Applications running on the Kubernetes engine can synchronize (mainly replicate) data across all participating workload-bearing pods.
- In case of a failure or DR, failover is synchronous and immediate.
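The latency requirement can be verified against measurements taken between the regions. The Python sketch below assumes the RTT values have already been collected by other means (for example with ping or an existing monitoring system) and uses the 12 ms upper bound quoted above; the region names and figures are hypothetical.

```python
"""Sketch: compare measured inter-region RTTs with the cluster's latency budget.

Assumptions: RTT values (milliseconds) are measured elsewhere; region names and
numbers are hypothetical. 12 ms is the upper end of the 10-12 ms budget.
"""
from itertools import combinations

MAX_RTT_MS = 12.0


def rtt_violations(rtt_ms: dict[frozenset[str], float], regions: list[str]) -> list[str]:
    """List every region pair that is unmeasured or slower than MAX_RTT_MS."""
    problems = []
    for a, b in combinations(regions, 2):
        link = frozenset((a, b))  # links are undirected, so order does not matter
        if link not in rtt_ms:
            problems.append(f"{a}<->{b}: no measurement")
        elif rtt_ms[link] > MAX_RTT_MS:
            problems.append(f"{a}<->{b}: {rtt_ms[link]:.1f} ms exceeds {MAX_RTT_MS:.0f} ms")
    return problems


if __name__ == "__main__":
    regions = ["region-a", "region-b", "region-c"]
    measured = {
        frozenset(("region-a", "region-b")): 8.2,
        frozenset(("region-a", "region-c")): 14.5,
        frozenset(("region-b", "region-c")): 11.0,
    }
    for problem in rtt_violations(measured, regions) or ["all links within budget"]:
        print(problem)
```

In this sample only the region-a<->region-c link exceeds the budget. Missing measurements are reported as well, since an unmeasured link cannot be assumed to meet the requirement.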

Architecture

The geographical cluster is just like a normal HA cluster, with availability extended across multiple locations (or sites). It is intended specifically for cases where disaster recovery between multiple datacenters or regions is required: if one of the datacenters goes down, the standby deployment takes over the workload of the same cluster. Geographical Deployment of Expertflow CX with Redundancy describes in greater detail how the solution is deployed and what other precautions are taken to shift the workload to the standby site.

Currently 3 different DR based deployment models

-

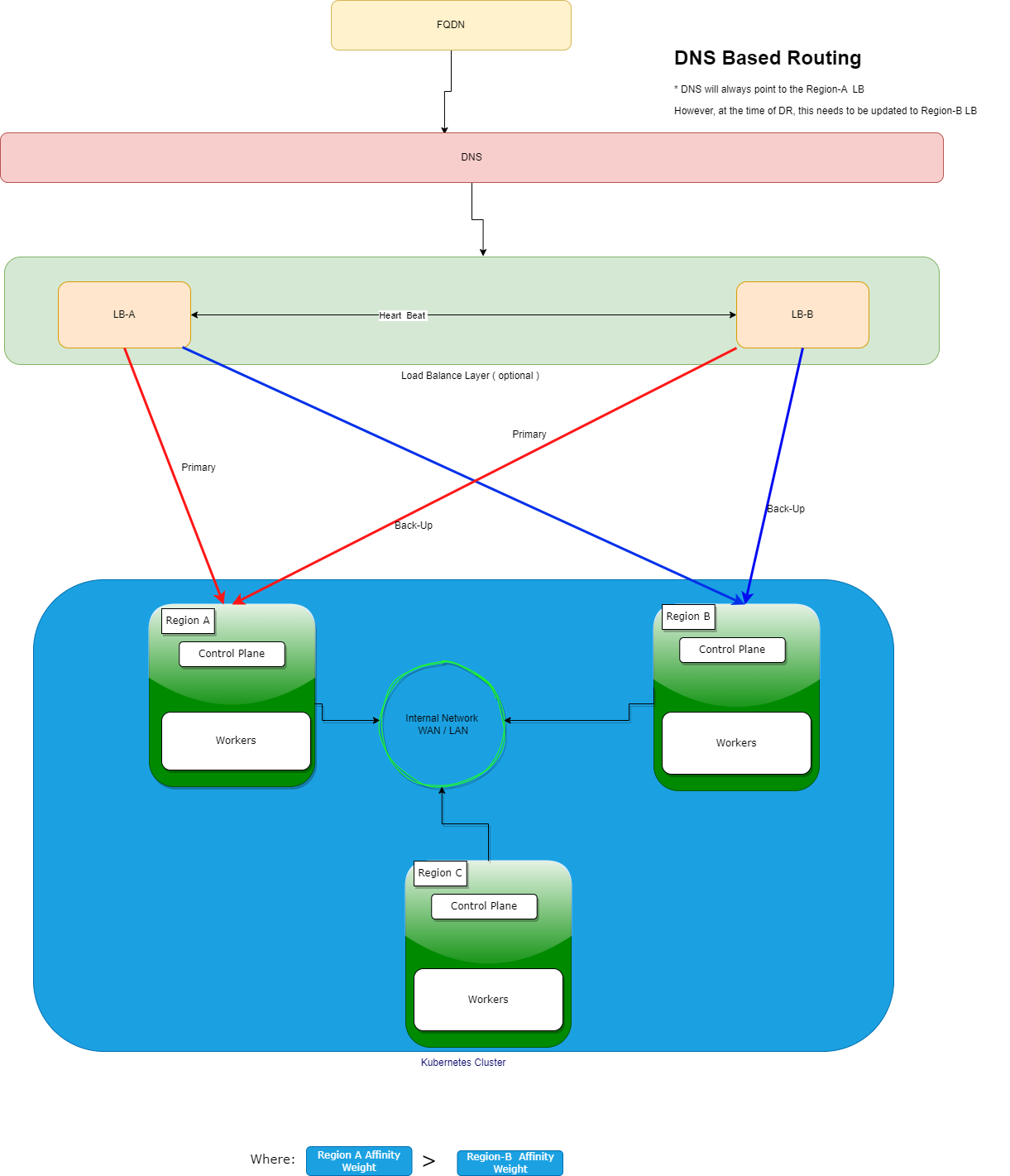

High Availability with DNS requires automatic DNS shifting to standby site.manual DNS shifting can also be used.

-

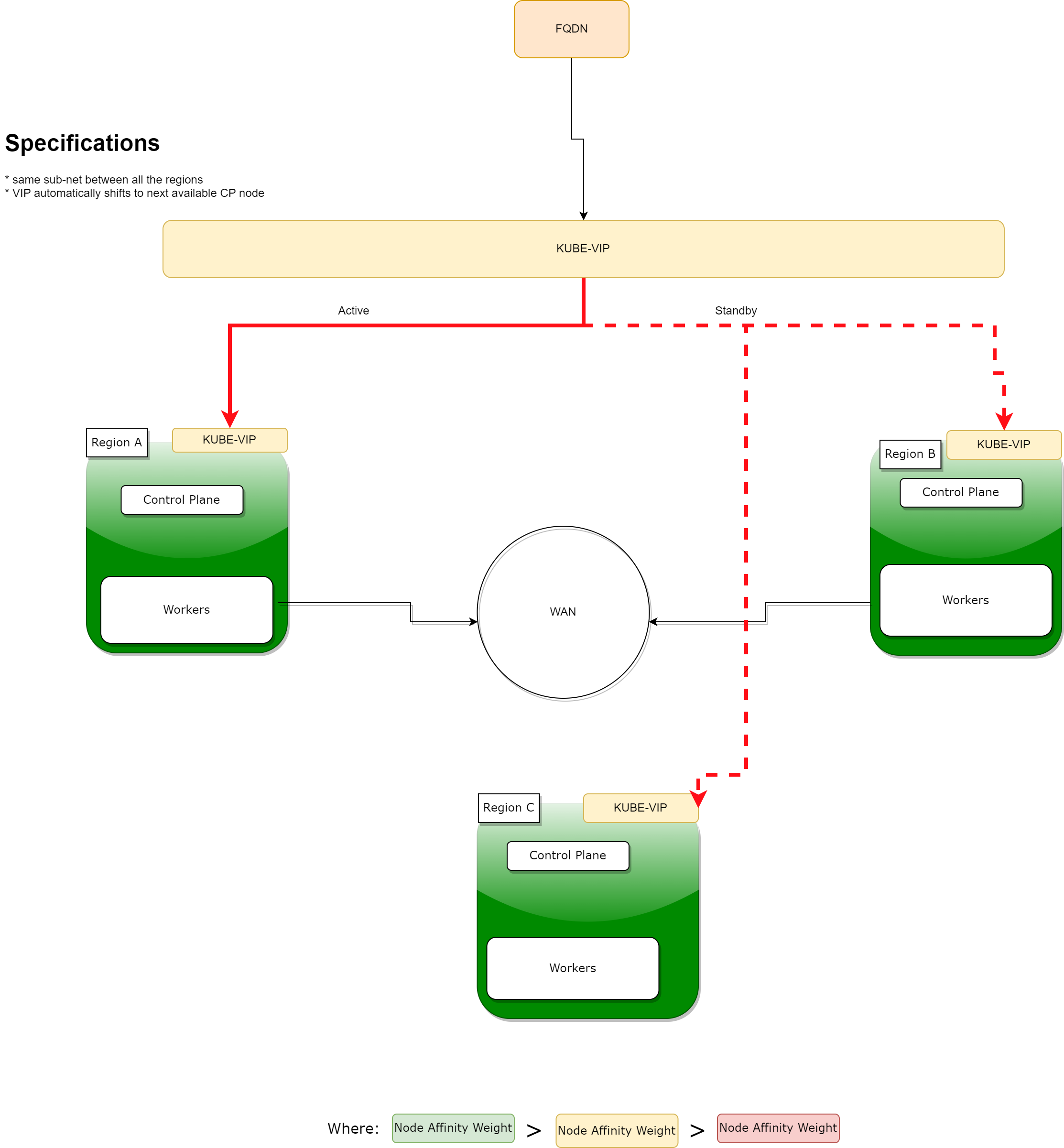

High Availability with Kube-VIP on Layer2 requires same subnet between all the controler nodesfailover to standby is instantaneous

-

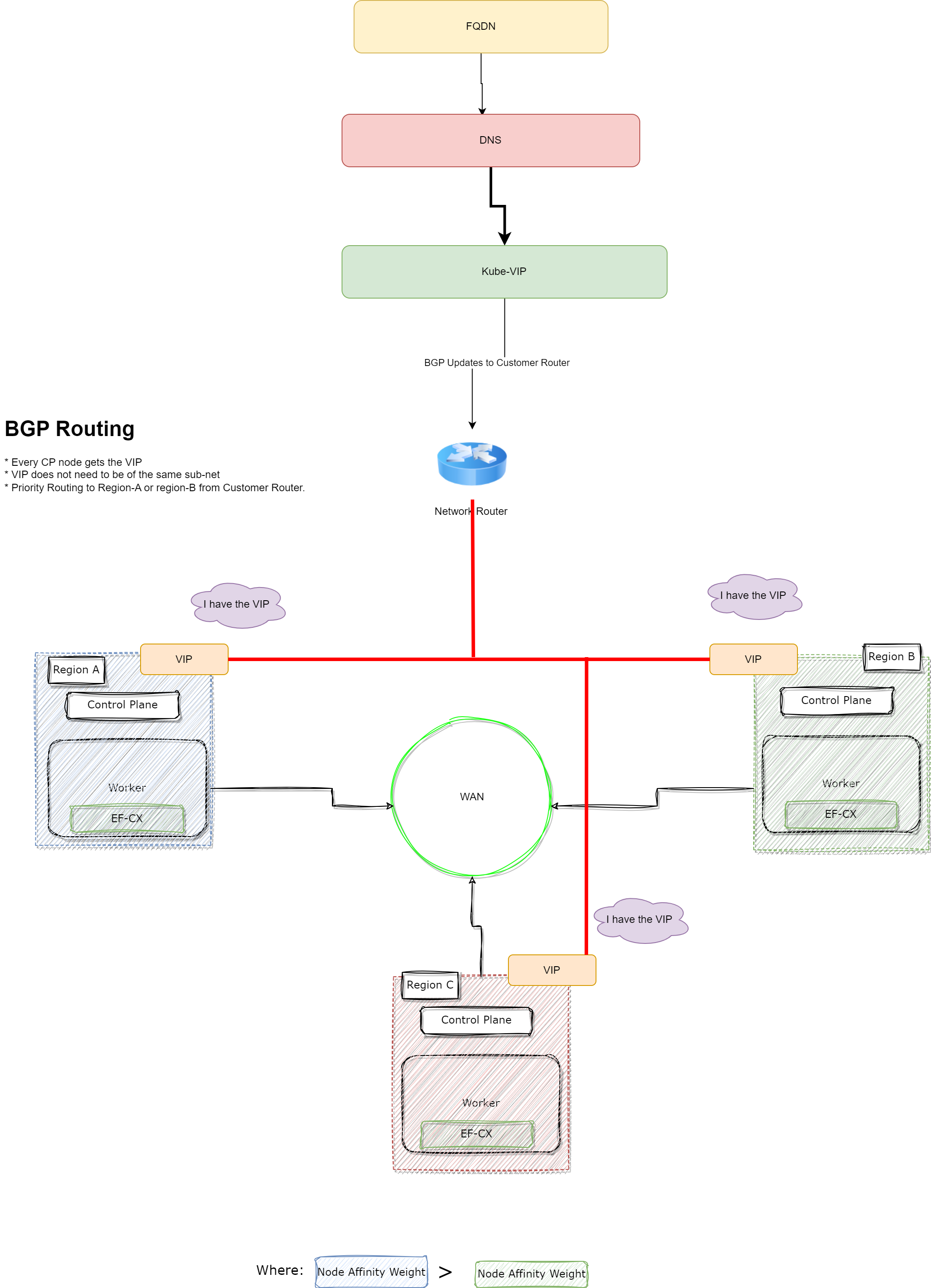

High Availability with with Kube-VIP using BGPrequires some advanced network topology of BGP routing works with different subnetsfailover to standby is instantaneous
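For the Kube-VIP Layer 2 model, the same-subnet requirement can be checked before installation. The Python sketch below uses the standard `ipaddress` module; the subnet, node addresses and virtual IP (VIP) are hypothetical placeholders, and it assumes the VIP must also belong to that subnet, since in Layer 2 (ARP) mode the VIP is announced on the local network segment.

```python
"""Sketch: pre-flight check for the Kube-VIP Layer 2 deployment model.

Assumptions: subnet, node IPs and VIP are hypothetical placeholders; in
Layer 2 (ARP) mode the VIP and every Control-Plane node share one subnet.
"""
import ipaddress


def addresses_outside_subnet(subnet: str, vip: str, node_ips: list[str]) -> list[str]:
    """Return the VIP or node addresses that are not inside `subnet`."""
    network = ipaddress.ip_network(subnet)
    return [ip for ip in [vip, *node_ips] if ipaddress.ip_address(ip) not in network]


if __name__ == "__main__":
    control_plane = ["10.10.1.11", "10.10.1.12", "10.20.5.13"]  # third node in another region
    outside = addresses_outside_subnet("10.10.1.0/24", "10.10.1.100", control_plane)
    if outside:
        print("Layer 2 mode not suitable; addresses outside the subnet:", outside)
    else:
        print("All Control-Plane nodes and the VIP share one subnet.")
```

If any address falls outside the subnet, as the third node does here, the Layer 2 model does not fit, and the BGP-based model, which works with different subnets, is the alternative.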